Resources & Datasets:

Src Example of Reinforcement Learning for Auto-Navigation

This is an attempt to explore reinforcement learning, using OpenAI, to train an agent to perform auto-navigation. In this implementation, we propose a new autonomous driving environment in which the agent learns appropriate behavior in a structured setting. The proposed architecture also upgrades the training model with additional functionality for creating environments that resemble the real world. The main objective of this work is to train an agent that avoids crashes and treats safety as its top priority.
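The environment and training algorithm are not described in detail here, so the following is only a minimal sketch of the idea of learning crash avoidance. It uses a hypothetical three-lane toy road (`LANES`, `LENGTH`, `OBSTACLES`, and the reward values are made up) and plain tabular Q-learning in place of the OpenAI-based setup used by the implementation. A crash ends the episode with a large penalty, which is one simple way of encoding safety as the top priority in the reward.

```python
import random

# Hypothetical toy road: 3 lanes, 6 rows; the car advances one row per step.
LANES, LENGTH = 3, 6
OBSTACLES = {(2, 1), (4, 0), (4, 2)}   # (row, lane) cells occupied by obstacles
ACTIONS = (-1, 0, 1)                   # steer left, keep lane, steer right
START = (0, 1)


def step(state, action):
    """Advance one row; return (next_state, reward, done, crashed)."""
    row, lane = state
    nxt = (row + 1, lane + ACTIONS[action])
    if not 0 <= nxt[1] < LANES or nxt in OBSTACLES:
        return nxt, -100.0, True, True      # crash: large penalty, episode ends
    if nxt[0] == LENGTH - 1:
        return nxt, 10.0, True, False       # reached the end of the road safely
    return nxt, -0.1, False, False          # small cost per step


def greedy(values):
    """Index of the action with the highest Q-value (first one on ties)."""
    return max(range(len(ACTIONS)), key=lambda a: values[a])


def train(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {}
    for _ in range(episodes):
        state, done = START, False
        while not done:
            values = q.setdefault(state, [0.0] * len(ACTIONS))
            a = rng.randrange(len(ACTIONS)) if rng.random() < epsilon else greedy(values)
            nxt, reward, done, _ = step(state, a)
            target = reward
            if not done:
                target += gamma * max(q.setdefault(nxt, [0.0] * len(ACTIONS)))
            values[a] += alpha * (target - values[a])   # in-place Q update
            state = nxt
    return q


def rollout(q):
    """Follow the greedy policy from START; return (visited cells, crashed flag)."""
    state, done, crashed, path = START, False, False, [START]
    while not done:
        a = greedy(q.get(state, [0.0] * len(ACTIONS)))
        state, _, done, crashed = step(state, a)
        path.append(state)
    return path, crashed
```

Usage: `path, crashed = rollout(train())` returns the greedy route through the obstacles. Because the crash penalty dwarfs both the goal reward and the step cost, any safe route scores higher than any crash. A realistic driving environment would replace the grid with a continuous state and a function approximator.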

Src NetCalib Ver2.0

We are happy to announce that our work on deep-learning-based sensor auto-calibration has led to an upgrade of our approach, NetCalib. With this upgrade, NetCalib outperforms state-of-the-art techniques. If you are interested in the trained model or the implementation, please see the GitHub repository.

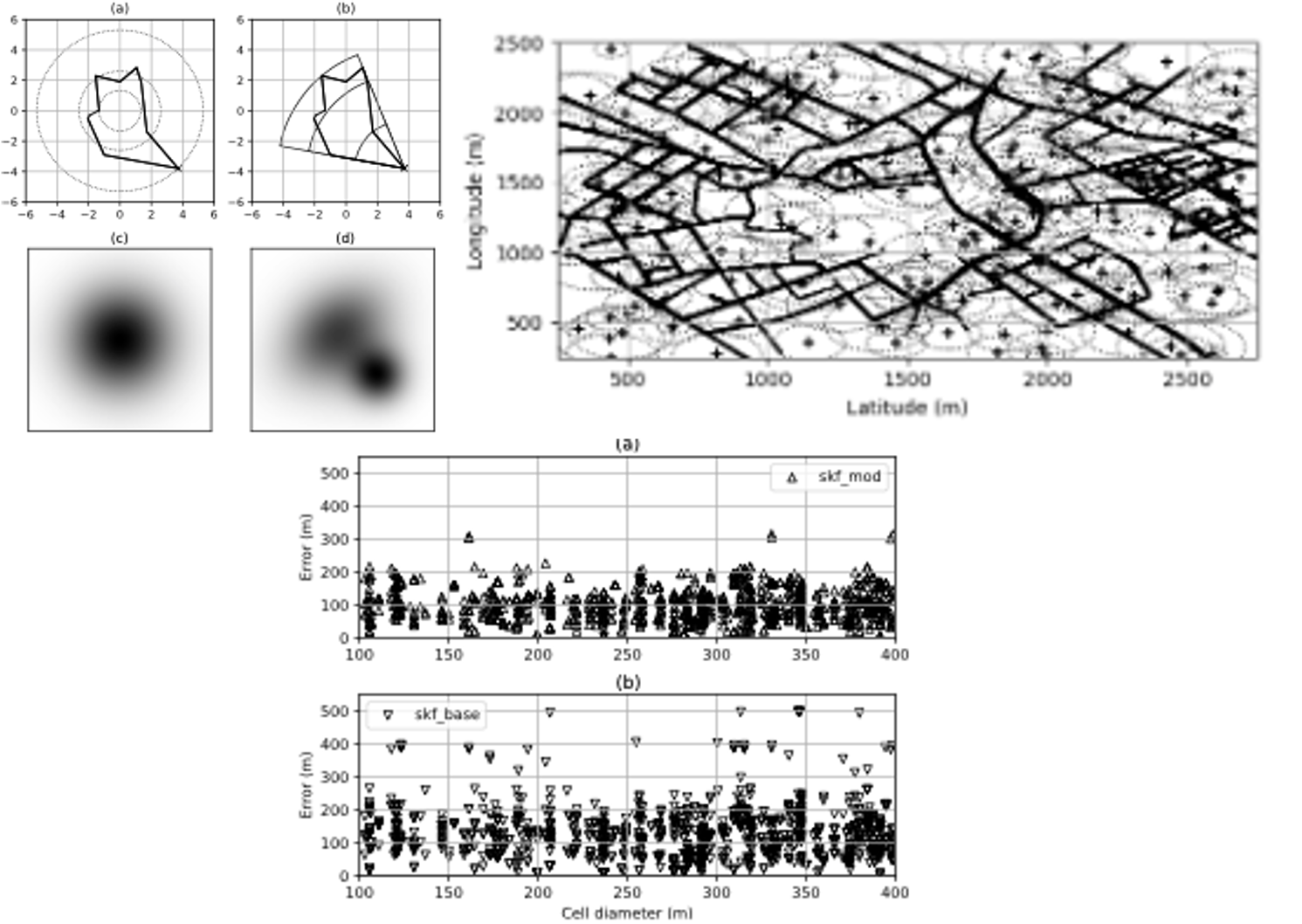

Src Switching Kalman Filter and Coverage Optimization Method

Our work on the potential of CDR data for mobility and transport applications led us to explore whether the extracted trajectories can be transformed into GPS-like traces. Along the way, we noticed that increasing the accuracy of mobile-device positioning required a good representation of each antenna's coverage area. We are therefore happy to share our implementation of an optimized coverage-area representation and a switching Kalman filter for mobile positioning.
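To illustrate the switching idea only (this is not the implementation above, whose coverage-area model is not described here), the sketch below runs a one-dimensional switching Kalman filter with the GPB1 approximation. It uses two hypothetical motion modes, stationary and moving, that differ only in process noise: `gpb1_step` performs one Kalman update per mode, reweights the modes by their measurement likelihood, and collapses the results into a single Gaussian. The noise values `Q` and `R` and the transition matrix `Z` are assumptions; in a CDR setting, `R` could plausibly be tied to the size of the serving antenna's coverage area.

```python
import math

# Hypothetical parameters chosen for illustration only.
Q = (0.01, 25.0)               # process-noise variance per mode: 0 = stationary, 1 = moving
R = 4.0                        # measurement-noise variance (e.g. tied to coverage-area size)
Z = ((0.9, 0.1), (0.1, 0.9))   # Z[i][j] = P(mode j at time k | mode i at time k-1)


def gaussian_pdf(x, var):
    """Density of a zero-mean Gaussian with variance var, evaluated at x."""
    return math.exp(-0.5 * x * x / var) / math.sqrt(2.0 * math.pi * var)


def gpb1_step(m, p, mu, z):
    """One GPB1 step: a Kalman update per mode, reweighting, then moment-matched collapse."""
    means, variances, weights = [], [], []
    for j in range(len(Q)):
        prior_j = sum(Z[i][j] * mu[i] for i in range(len(mu)))  # predicted mode probability
        p_pred = p + Q[j]                  # random-walk prediction: mean unchanged
        s = p_pred + R                     # innovation variance
        k = p_pred / s                     # Kalman gain
        innovation = z - m
        means.append(m + k * innovation)
        variances.append((1.0 - k) * p_pred)
        weights.append(prior_j * gaussian_pdf(innovation, s))
    total = sum(weights)
    mu_new = [w / total for w in weights]
    m_new = sum(w * mj for w, mj in zip(mu_new, means))
    # Collapse: mixture variance = weighted variances + spread of the per-mode means.
    p_new = sum(w * (pj + (mj - m_new) ** 2) for w, pj, mj in zip(mu_new, variances, means))
    return m_new, p_new, mu_new
```

Feeding a few measurements near 0 followed by large jumps shifts the probability mass from the stationary mode to the moving mode, and the collapsed mean follows the jump. A 2-D version would replace the scalars with state vectors and covariance matrices.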

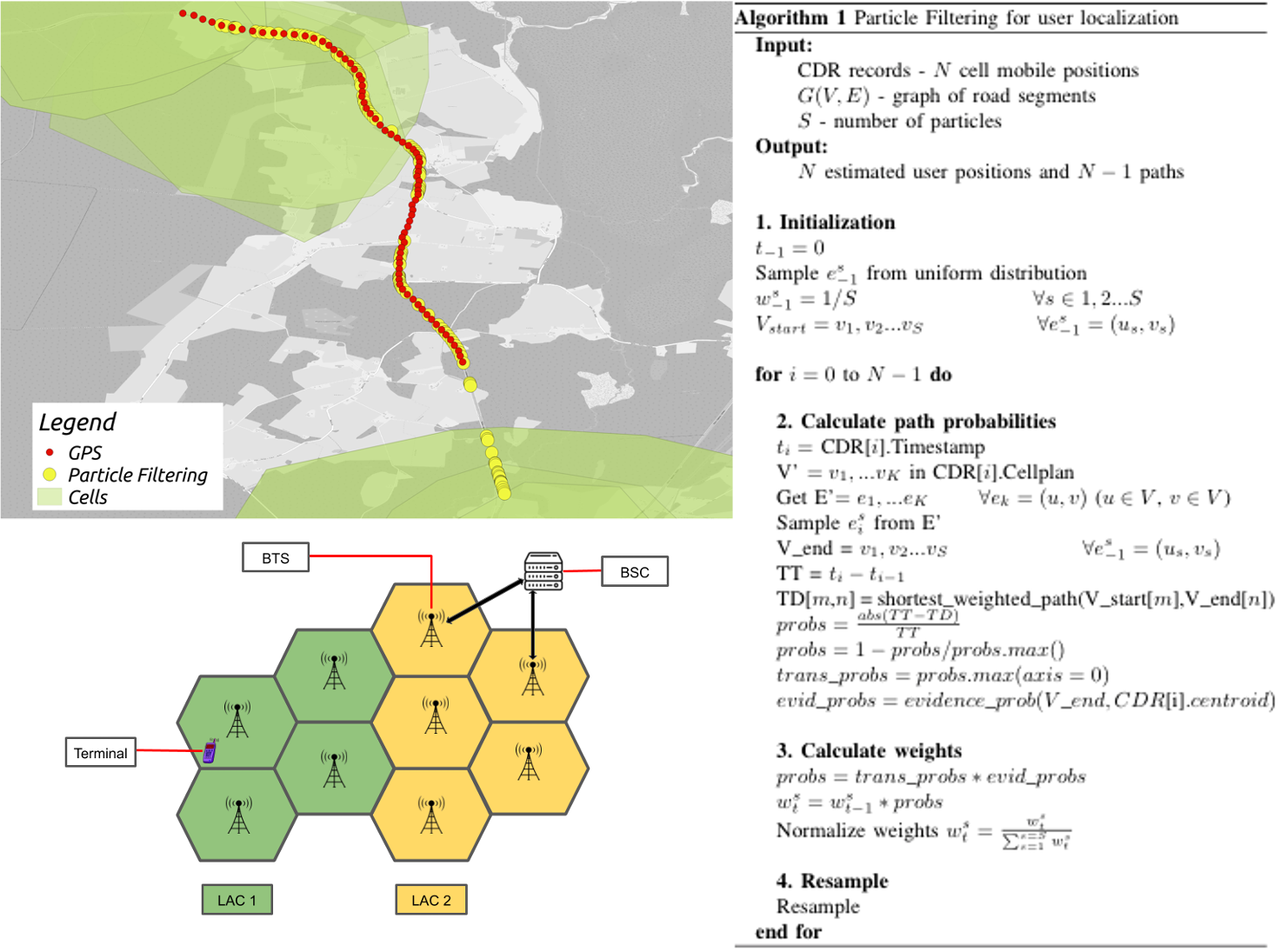

Src Particle Filter for Trajectory Reconstruction and Mobile Positioning

This is an implementation of a particle filter, a nonlinear approach, for mobile positioning and trajectory reconstruction using mobile network data (such as CDR and/or VLR data). Our goal is to evaluate whether this nonlinear method can outperform existing linear methods such as the switching Kalman filter.
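A bootstrap particle filter can be sketched in a few lines. The version below is one-dimensional and assumes a random-walk motion model and a Gaussian likelihood around the observed position. Both are simplifications chosen for illustration, and `motion_std`, `obs_std`, and the particle count are hypothetical. Resampling uses `random.Random.choices`, which amounts to multinomial resampling.

```python
import math
import random


def particle_filter(observations, n_particles=1000, motion_std=1.0, obs_std=1.0, seed=0):
    """Bootstrap particle filter for a 1-D position; returns one estimate per observation."""
    rng = random.Random(seed)
    # Initialize the particle cloud around the first observation.
    particles = [rng.gauss(observations[0], 2.0) for _ in range(n_particles)]
    estimates = []
    for z in observations:
        # 1. Predict: propagate each particle through a random-walk motion model.
        particles = [p + rng.gauss(0.0, motion_std) for p in particles]
        # 2. Weight: Gaussian likelihood of the observation given each particle.
        weights = [math.exp(-0.5 * ((z - p) / obs_std) ** 2) for p in particles]
        total = sum(weights)
        if total == 0.0:
            weights = [1.0 / n_particles] * n_particles   # degenerate case: reset to uniform
        else:
            weights = [w / total for w in weights]
        # 3. Estimate: weighted mean of the particle cloud.
        estimates.append(sum(w * p for w, p in zip(weights, particles)))
        # 4. Resample (multinomial) so particles concentrate in likely regions.
        particles = rng.choices(particles, weights=weights, k=n_particles)
    return estimates
```

The practical advantage over a Kalman filter is that the Gaussian weight in step 2 can be replaced by any likelihood, for instance one that is roughly uniform inside an antenna's coverage area and near zero outside it, without any linearization.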

Src NetCalib Ver1.0

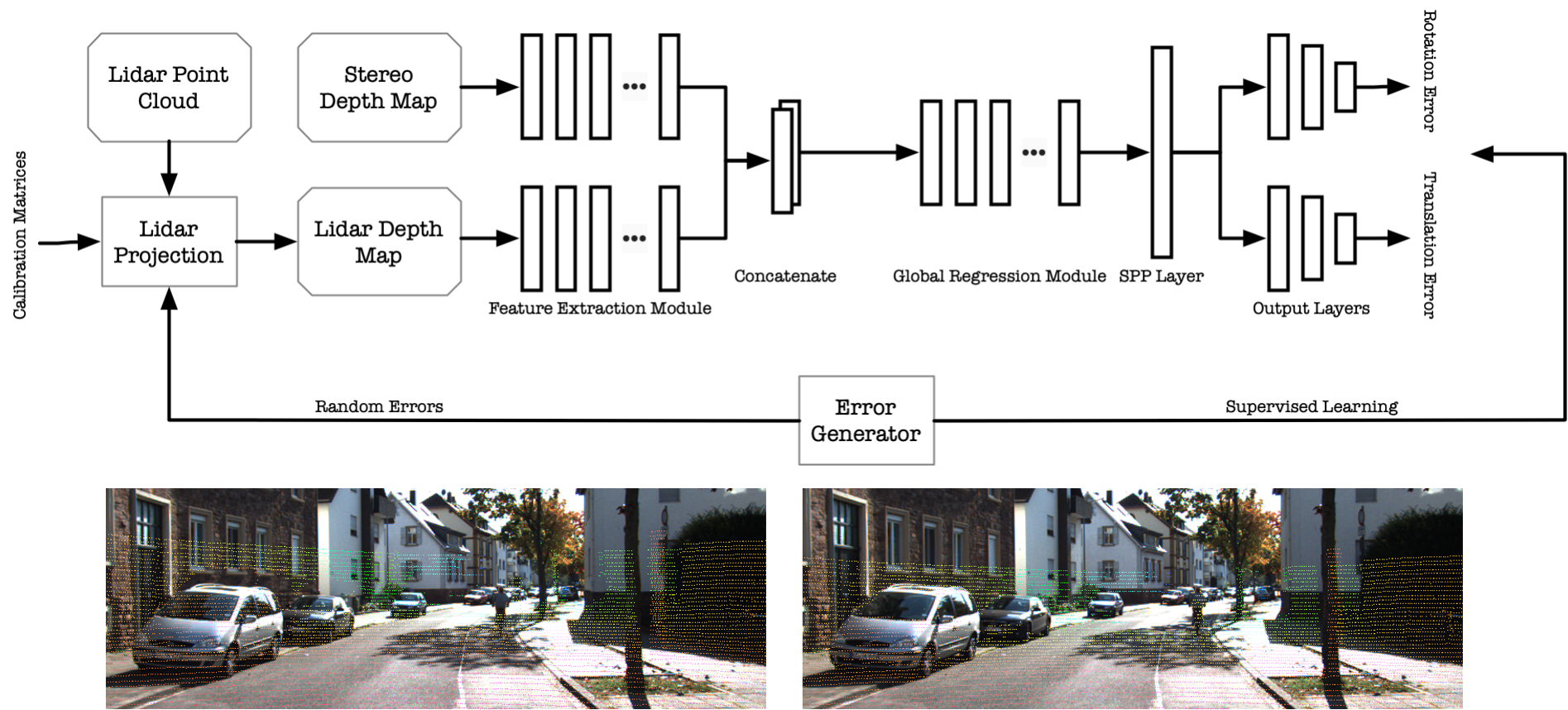

NetCalib is a deep neural network method for automatically finding the geometric transformation between a LiDAR and cameras in order to calibrate the two sensors. For example, given a depth map computed from the cameras and another depth map obtained by projecting the LiDAR point cloud into the image frame, the calibration model can find the geometric errors between the two depth maps. The model consists of two feature extraction networks, one global regression network, one spatial pyramid pooling (SPP) layer, and two output modules. Each feature extraction network preprocesses its input and outputs a compact, high-level representation of the corresponding depth map. The global regression network learns the geometry between the two modalities; the SPP layer then unifies the feature size, and the resulting features encode the geometric relationship between the sensor modalities. Finally, the output modules take the processed features and estimate the de-calibration errors.
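The LiDAR branch of the input can be illustrated with a standard pinhole projection. The sketch assumes a camera intrinsic matrix `K` and a LiDAR-to-camera rotation `R` and translation `t` (the extrinsics that calibration estimates), and keeps the nearest depth per pixel. `project_to_depth_map` is a hypothetical helper, and NetCalib's actual preprocessing may differ. Projecting the same points with a slightly wrong `t` moves depth values to other pixels, which is the kind of misalignment the model learns to regress.

```python
def project_to_depth_map(points, K, R, t, width, height):
    """Project LiDAR points (x, y, z) into a sparse height x width depth map (pinhole model)."""
    fx, fy, cx, cy = K[0][0], K[1][1], K[0][2], K[1][2]
    depth = [[0.0] * width for _ in range(height)]  # 0.0 means "no LiDAR return"
    for p in points:
        # Rigid transform from the LiDAR frame into the camera frame: p_cam = R p + t.
        xc, yc, zc = (sum(R[r][k] * p[k] for k in range(3)) + t[r] for r in range(3))
        if zc <= 0.0:
            continue  # point is behind the camera
        # Pinhole projection onto the image plane.
        u = int(round(fx * xc / zc + cx))
        v = int(round(fy * yc / zc + cy))
        if 0 <= u < width and 0 <= v < height:
            # Keep the nearest point when several land on the same pixel.
            if depth[v][u] == 0.0 or zc < depth[v][u]:
                depth[v][u] = zc
    return depth
```

Comparing the map produced with the true extrinsics against one produced with a perturbed `t` shows depth values landing on shifted pixels, which is the signal a learned calibration model has to pick up.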

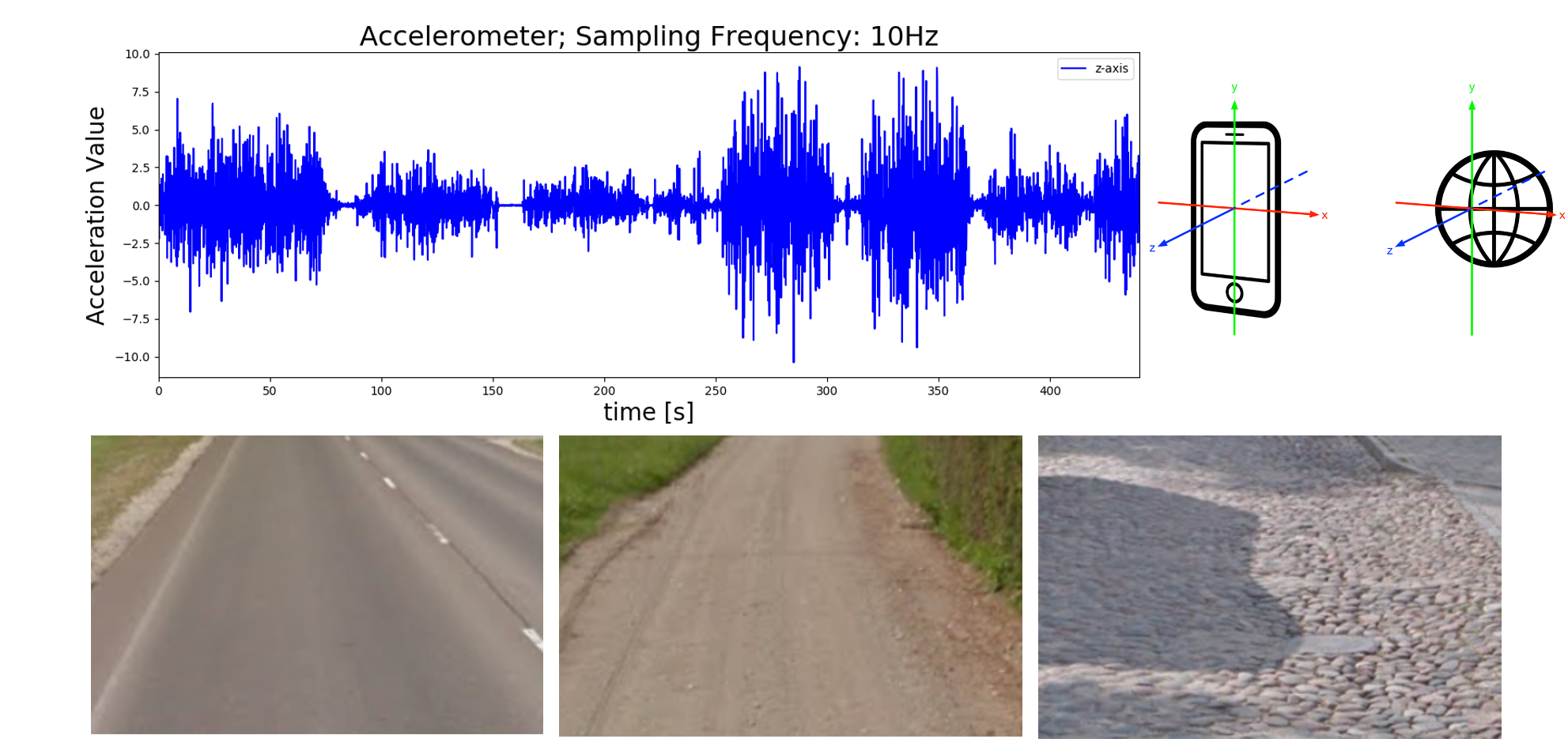

Data Accelerometer Dataset for Road Recognition

The accelerometer data were collected with a data-collection app installed on an Android smartphone. The app records three sensors (accelerometer, gyroscope, and GPS), all at the same sampling rate of 10 Hz (10 records per second). The collected data are written in CSV format with the following fields:

- Timestamp: recorded in milliseconds since 00:00:00.0 UTC, 1 January 1970 (the Unix epoch).

- Raw Data: raw accelerometer measurements for the X, Y, and Z axes, respectively, as reported by the Android API.

- Rectified Data: accelerometer data virtually transformed from the phone's coordinate frame to the world coordinate frame (virtually oriented accelerometer data).
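A minimal loader for one such CSV file is sketched below. The column names (`timestamp`, `raw_x` through `raw_z`, `rect_x` through `rect_z`) and the sample rows are made up for illustration; check the actual header before reuse. The timestamp is converted from milliseconds since the Unix epoch to a UTC `datetime`, and the sampling rate is estimated from consecutive timestamps, which should come out near 10 Hz.

```python
import csv
import io
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

# Hypothetical header and made-up values for illustration only.
SAMPLE_CSV = """timestamp,raw_x,raw_y,raw_z,rect_x,rect_y,rect_z
1546300800000,0.12,9.70,0.35,0.05,0.02,9.81
1546300800100,0.10,9.75,0.30,0.04,0.01,9.80
1546300800200,0.15,9.68,0.33,0.06,0.03,9.79
"""


def load_records(text):
    """Parse CSV text into dicts with a UTC datetime and raw/rectified (x, y, z) tuples."""
    records = []
    for row in csv.DictReader(io.StringIO(text)):
        ts_ms = int(row["timestamp"])
        records.append({
            "ts_ms": ts_ms,
            "time": EPOCH + timedelta(milliseconds=ts_ms),  # ms since Unix epoch -> UTC
            "raw": tuple(float(row[k]) for k in ("raw_x", "raw_y", "raw_z")),
            "rectified": tuple(float(row[k]) for k in ("rect_x", "rect_y", "rect_z")),
        })
    return records


def estimate_rate_hz(records):
    """Average sampling rate from consecutive timestamp differences (in ms)."""
    gaps = [b["ts_ms"] - a["ts_ms"] for a, b in zip(records, records[1:])]
    return 1000.0 * len(gaps) / sum(gaps)
```

Using integer milliseconds with `timedelta` avoids floating-point rounding in the timestamp conversion.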

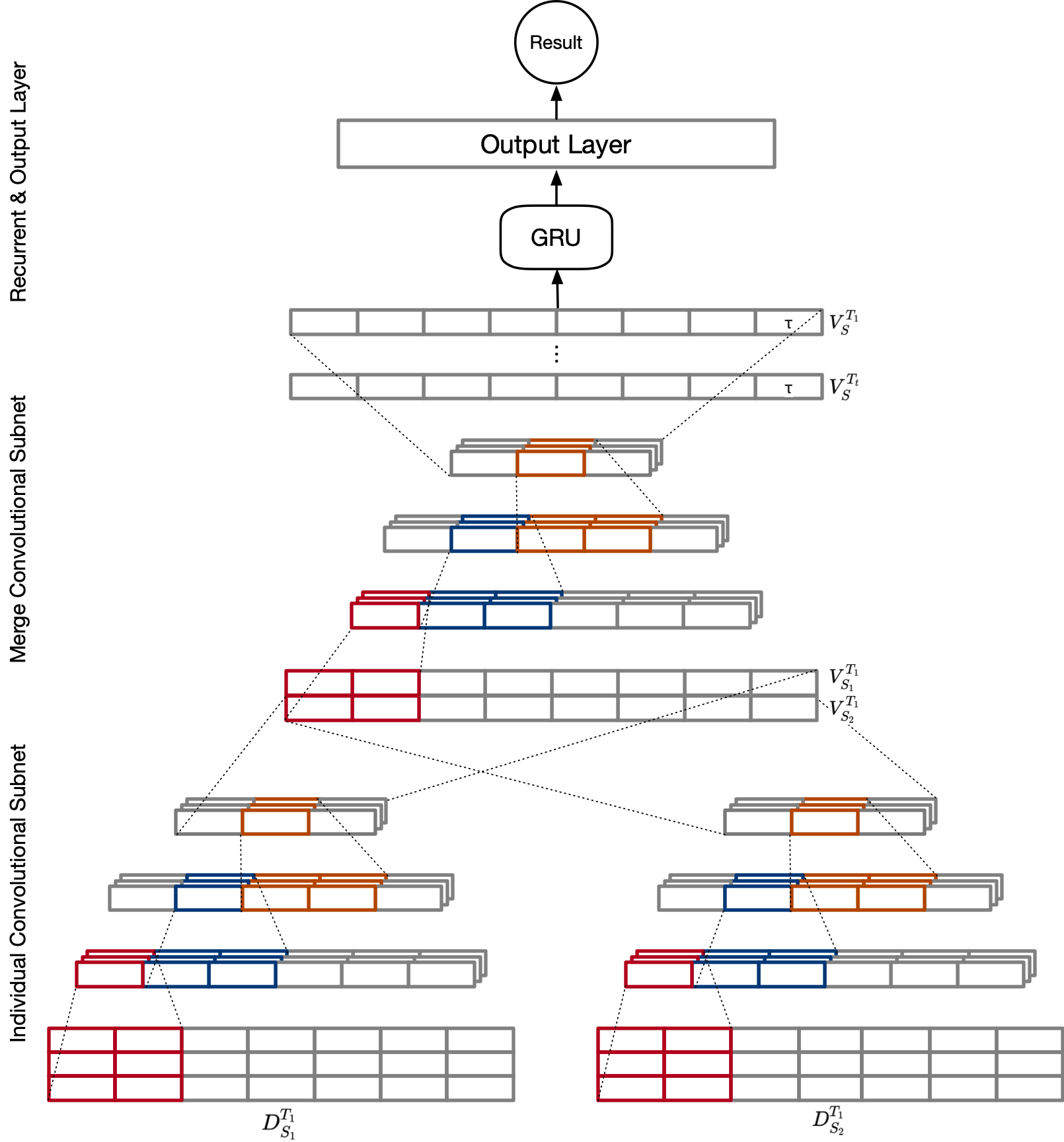

Src DeepSense Architecture

This is an implementation of the DeepSense neural network for road surface recognition. The architecture comprises an individual convolutional subnet for each sensor, a merged convolutional subnet, recurrent layers, and task-specific output layers. Since our approach uses only the smartphone's accelerometer, a single individual subnet is connected directly to the merged subnet.
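In the original DeepSense design, each sensor stream is divided into non-overlapping time intervals of width τ, and each interval is Fourier-transformed before it enters the individual convolutional subnet. The sketch below shows that preprocessing for the single accelerometer stream. Several details are assumptions and are not confirmed for this implementation: the interval width `tau`, the use of magnitude spectra only (rather than the full complex spectrum), and the naive O(n²) DFT, which keeps the example self-contained where `numpy.fft` would be the usual choice.

```python
import cmath


def dft(signal):
    """Naive discrete Fourier transform of a real sequence (O(n^2))."""
    n = len(signal)
    return [sum(signal[k] * cmath.exp(-2j * cmath.pi * f * k / n) for k in range(n))
            for f in range(n)]


def to_intervals(samples, tau):
    """Split (x, y, z) samples into non-overlapping intervals of tau samples.

    Trailing samples that do not fill a whole interval are dropped.
    """
    T = len(samples) // tau
    return [samples[i * tau:(i + 1) * tau] for i in range(T)]


def interval_features(samples, tau):
    """Per-interval magnitude spectra per axis, shaped T x 3 x tau."""
    features = []
    for interval in to_intervals(samples, tau):
        per_axis = []
        for axis in range(3):
            spectrum = dft([s[axis] for s in interval])
            per_axis.append([abs(c) for c in spectrum])
        features.append(per_axis)
    return features
```

The resulting T x 3 x tau array is the kind of input the single individual convolutional subnet would consume, with the T intervals later feeding the recurrent layers as a sequence.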