Working with Raster data

Data Structures Recap: Raster and Vector

The two primary types of geospatial data are raster and vector data:

Vector data structures represent specific features on the Earth’s surface, and assign attributes to those features.

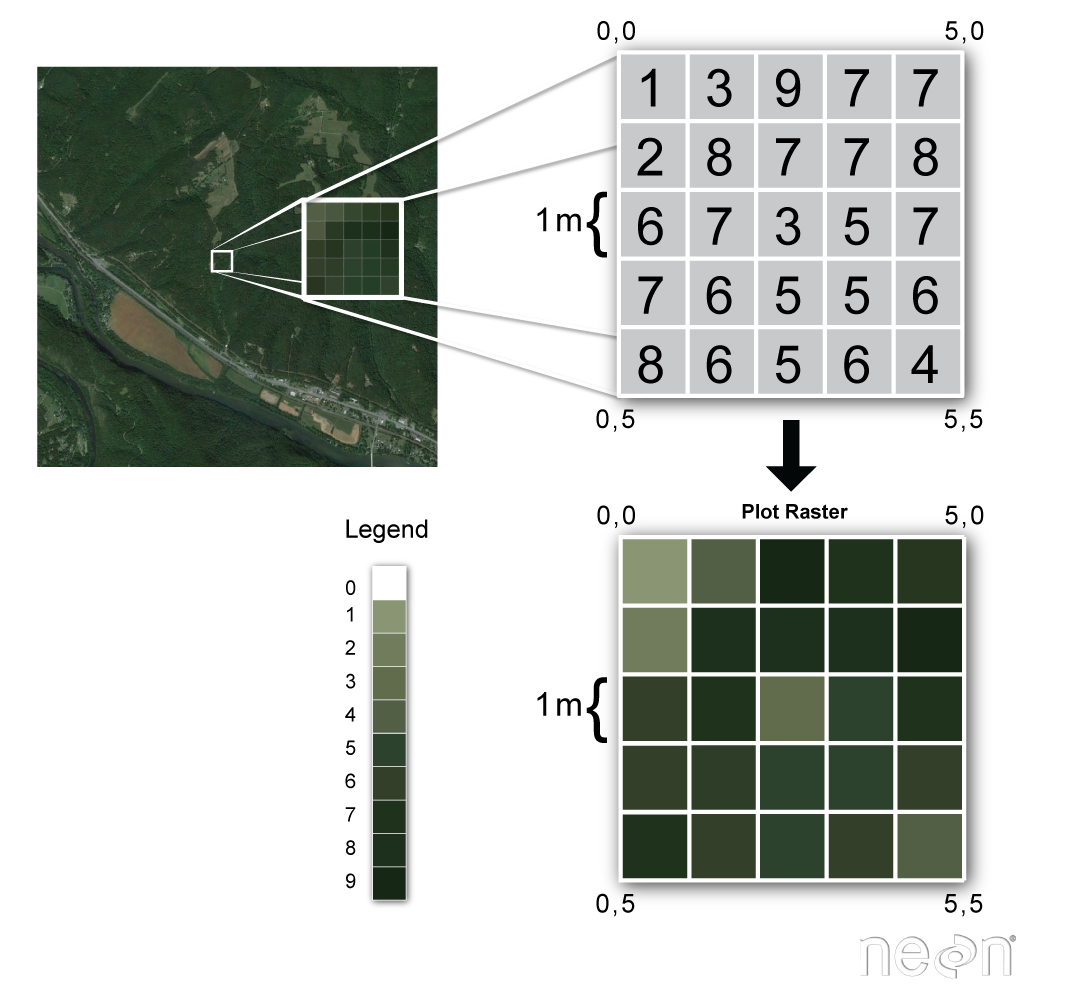

Raster data is stored as a grid of values which are rendered on a map as pixels. Each pixel value represents an area on the Earth’s surface.

Raster data is any pixelated (or gridded) data where each pixel is associated with a specific geographical location. The value of a pixel can be continuous (e.g. elevation) or categorical (e.g. land use). If this sounds familiar, it is because this data structure is very common: it’s how we represent any digital image. A geospatial raster is only different from a digital photo in that it is accompanied by spatial information that connects the data to a particular location. This includes the raster’s extent and cell size, the number of rows and columns, and its coordinate reference system (or CRS).

Source: National Ecological Observatory Network (NEON)

Common properties of any raster:

number of rows and columns (sometimes referred to as lines and samples)

data type (dtype, or bit depth) - e.g., 8-bit (2^8 possible values, 0-255)

some kind of resolution information, often dots per inch (dpi) with raster graphics

A geospatial raster is only different from a digital photo in that it is accompanied by metadata that connects the grid to a particular location.

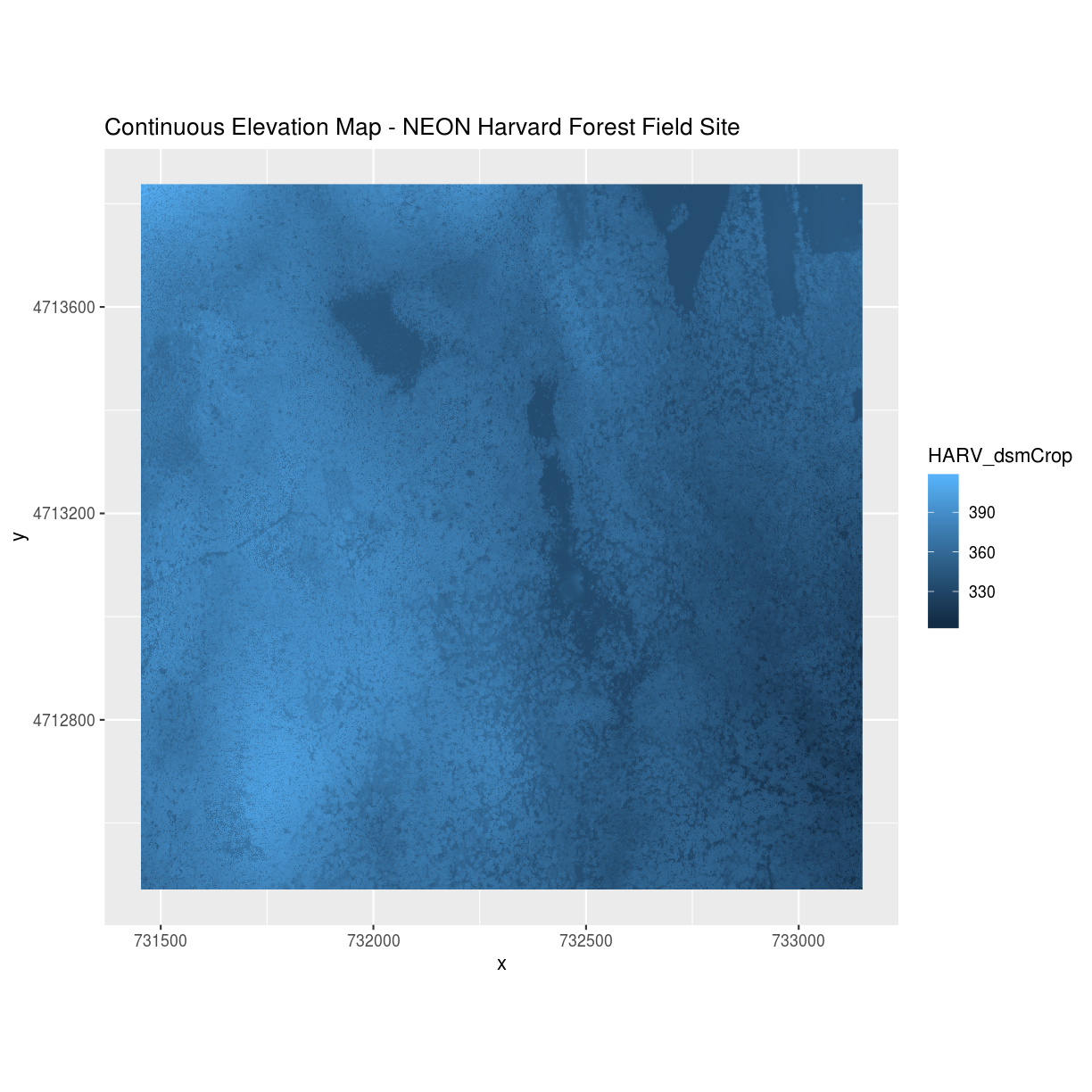

Examples of continuous rasters

2. Digital Elevation Models (DEMs) such as ASTER GDEM 1. Satellite imagery such as those acquired by Landsat or MODIS 3. Maps of canopy height derived from LiDAR data.

A map of elevation for Harvard Forest derived from the NEON AOP LiDAR sensor is below. Elevation is represented as continuous numeric variable in this map. The legend shows the continuous range of values in the data from around 300 to 420 meters.

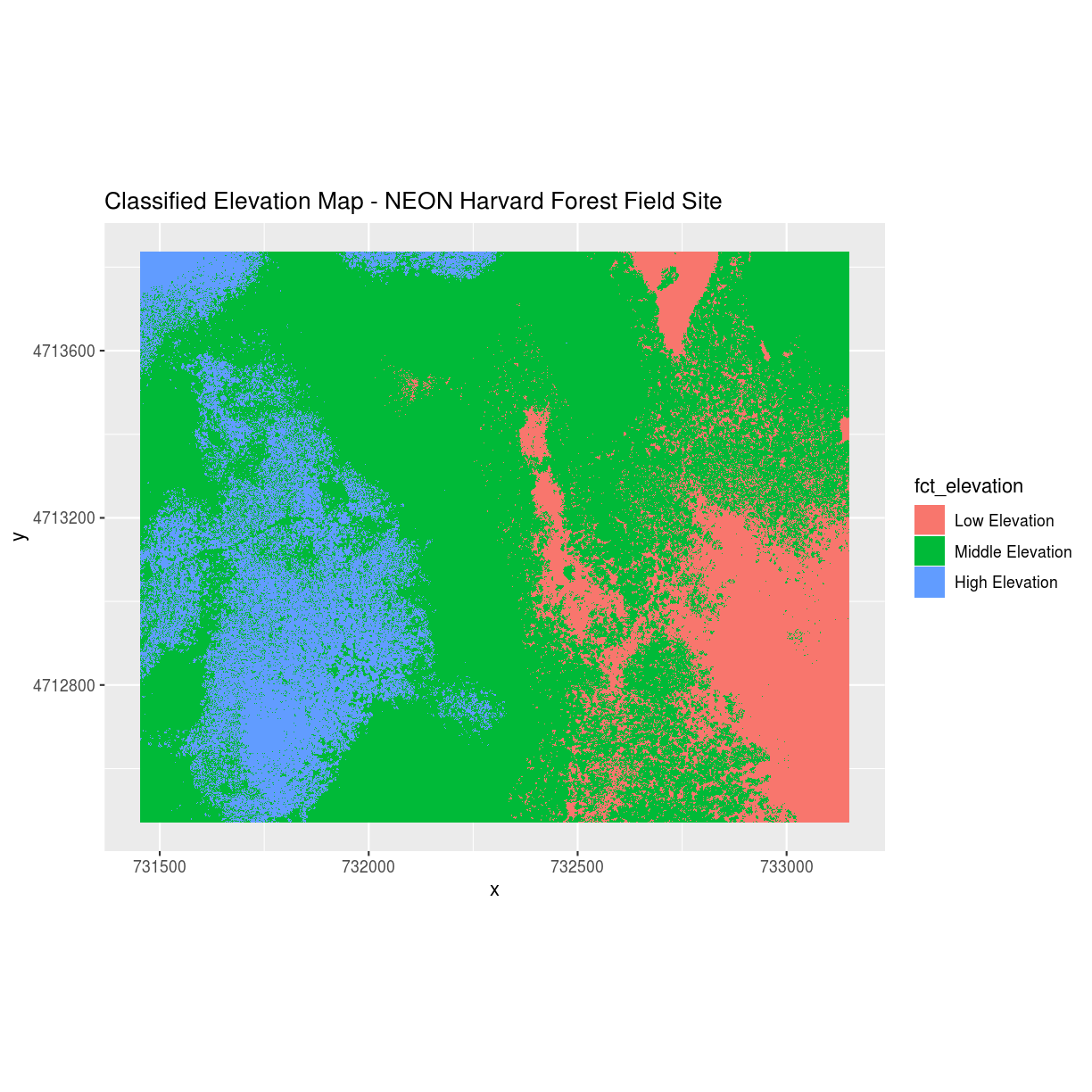

Examples of categorical rasters

Some rasters contain categorical data where each pixel represents a discrete class such as a landcover type (e.g., “coniferous forest” or “grassland”) rather than a continuous value such as elevation or temperature. Some examples of classified maps include:

Landcover / land-use maps

Snowcover masks (binary snow or no snow)

The following map shows elevation data for the NEON Harvard Forest field site. In this map, the elevation data (a continuous variable) has been divided up into categories to yield a categorical raster.

Raster Advantages and Disadvantages

Advantages:

efficient representation of continuous surfaces

cell-by-cell calculations can be very fast and efficient

potentially very high levels of detail

data is ‘unweighted’ across its extent

Disadvantages:

very large file sizes as cell size gets smaller

can be difficult to represent complex information

Measurements are spatially arranged in a regular grid, which may not be an accurate representation of real-world phenomena

Space-filling model assumes that all pixels have value

Changes in resolution can drastically change the meaning of values in a dataset

What makes a raster geospatial?

A raster is just an image in local pixel coordinates until we specify what part of the earth the image covers. This is done through two fundamental pieces of metadata that accompany the pixel values of the image:

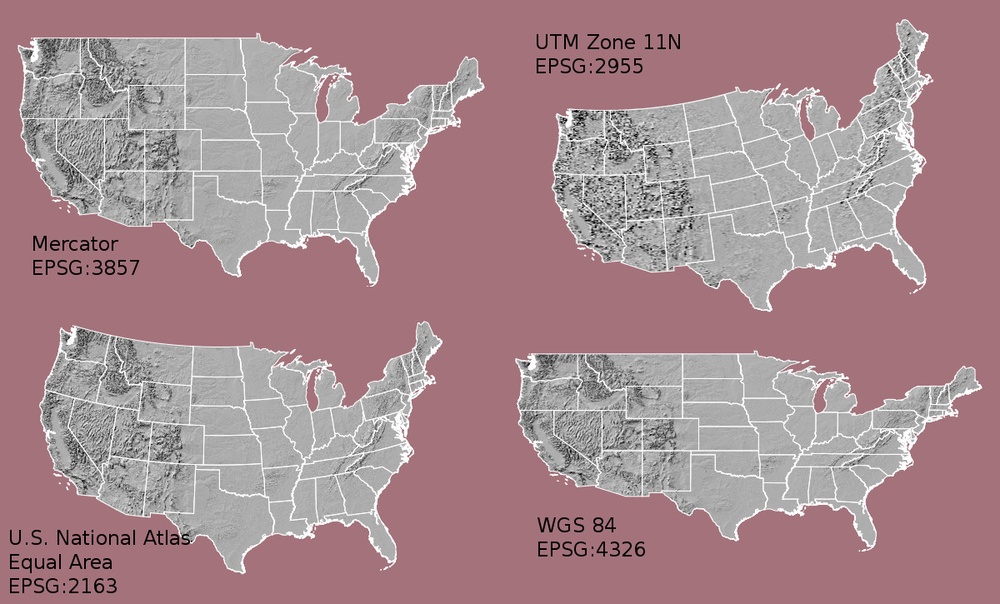

Coordinate Reference System or “CRS”

This specifies the datum, projection, and additional parameters needed to place the raster in geographic space, see lesson 2 crs and projections.

Geotransformation

This is the essential matrix that relates the raster pixel coordinates (rows, columns) to the geographic coordiantes (x and y defined by the CRS). This is typically a 6-parameter transformation that defines the origin, pixel size and rotation of the raster in the geographic coordinate system:

Xgeo = GT(0) + Xpixel*GT(1) + Yline*GT(2)

Ygeo = GT(3) + Xpixel*GT(4) + Yline*GT(5)

Defining this for the whole image allows the image’s pixels to be referenced by a local array index rather than global coordinates, and answers questions such as:

How much area does a given pixel cover?

Given the CRS, what is the origin?

In what direction does the raster “grow” as pixel indices increase?

Extent / BBOX

The affine transformation specifies an “extent” or “bounding box”, which is defined by the minimum and maximum x and y coordinates of the data.

Resolution / Posting

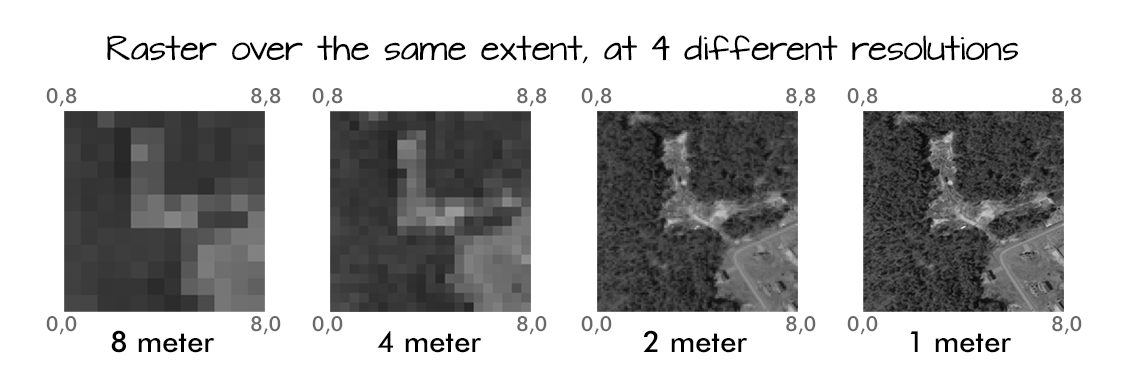

The affine transformation specifies a pixel size of the area on the ground that each pixel of the raster covers. This is often refered to as “resolution”, but because images are often resampled to different resolutions it is also refered to as “posting” or “ground sample distance (gsd)”. Ultimately, “resolution” refers to a sensors ability to distinguish different objects on the ground. The image below illustrates the effect of changes in resolution:

Source: National Ecological Observatory Network (NEON)

More resources:

Multi-band Raster Data

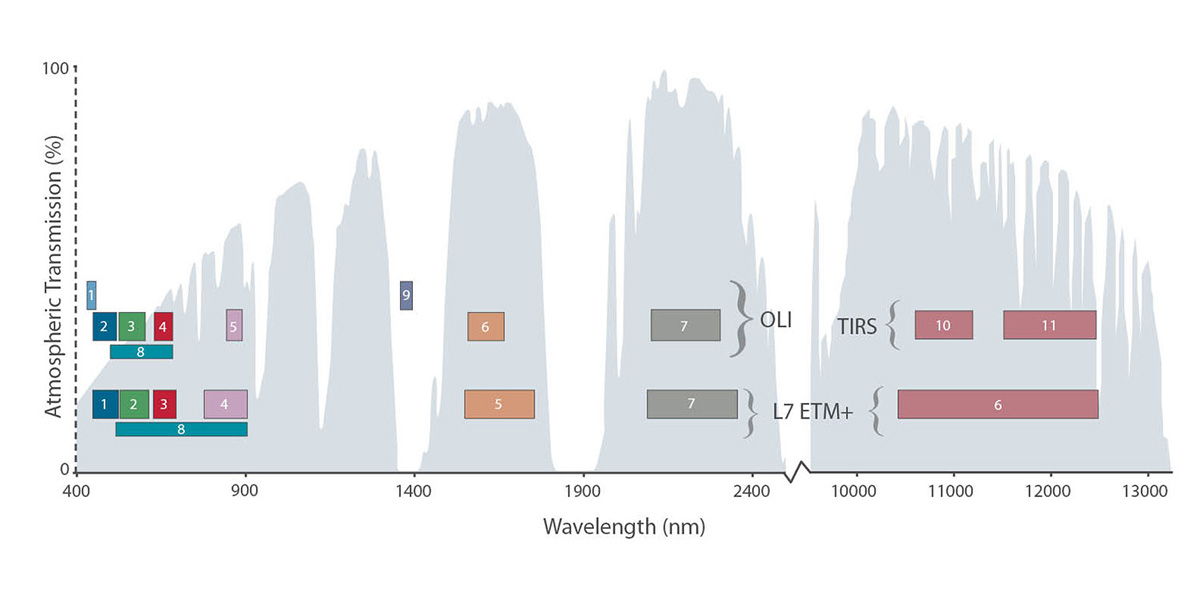

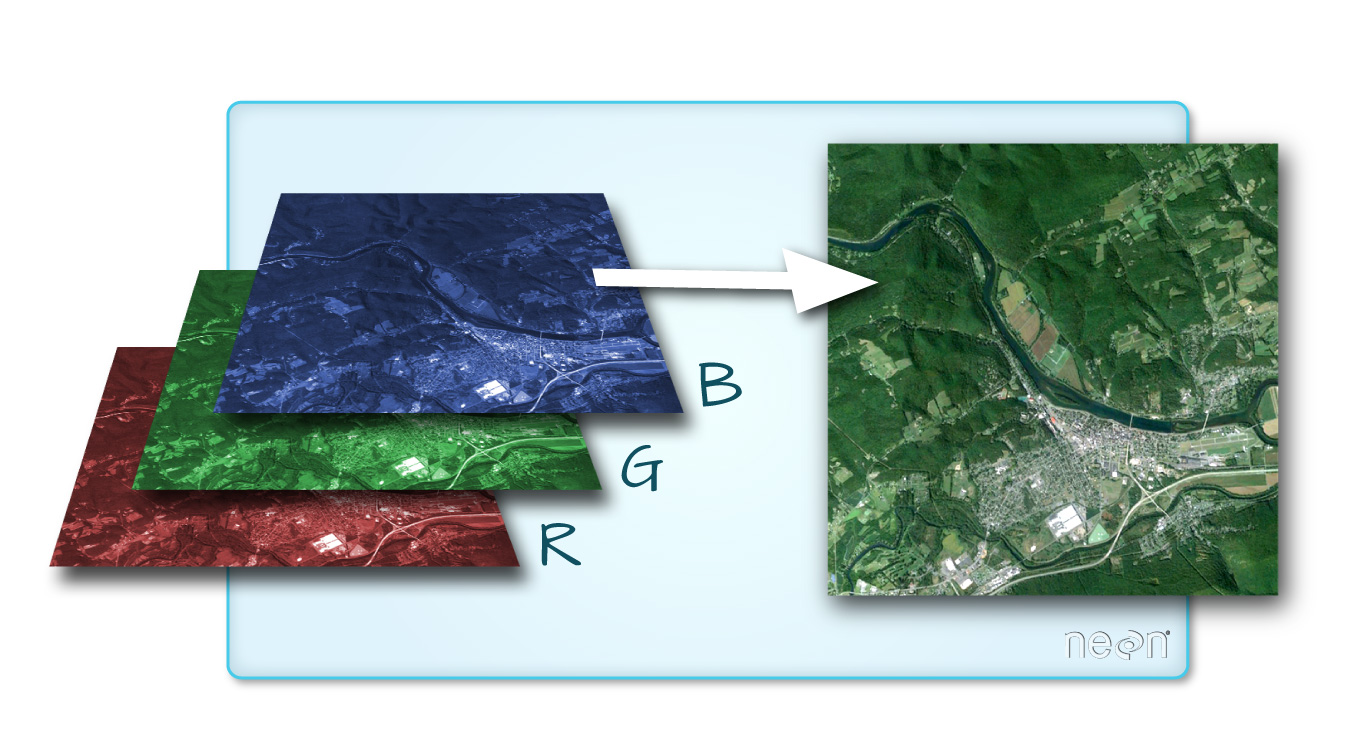

A raster can contain one or more bands. In a multi-band dataset, the rasters will always have the same extent, resolution, and CRS. Each band represents light reflected (or emmitted) from the different portions of the electromagnetic spectrum. The pixel brightness for each band, when composited creates the colors that we see in an image.

Source: L.Rocchio & J.Barsi

One type of multi-band raster dataset that is familiar to many of us is a color image. A basic color image consists of three bands: red, green, and blue.

Source: National Ecological Observatory Network (NEON).

Multi-band raster data might also contain:

Time series: the same variable, over the same area, over time

Multi or hyperspectral imagery: image rasters that have 4 or more (multi-spectral) or more than 10-15 (hyperspectral) bands

Key points

Raster data is pixelated data stored as a matrix

Raster images are often packaged as multidimensional arrays - containing for example RGB and other spectral bands

Geospatial raster data always has a coordinate reference system (CRS) and geotransform that maps image coordinates to locations on Earth’s surface

People often refer to “resolution” as the ground pixel size of a raster, but more specifically it refers to the sensor’s ability to disabiguate adjacent objects on the ground

Exploring and visualisation of raster data

Rasterio is a very useful module for raster processing which you can use for reading and writing several different raster formats in Python. Rasterio is based on GDAL and Python automatically registers all known GDAL drivers for reading supported formats when importing the module. Most common file formats include for example TIFF and GeoTIFF, ASCII Grid and Erdas Imagine .img -files.

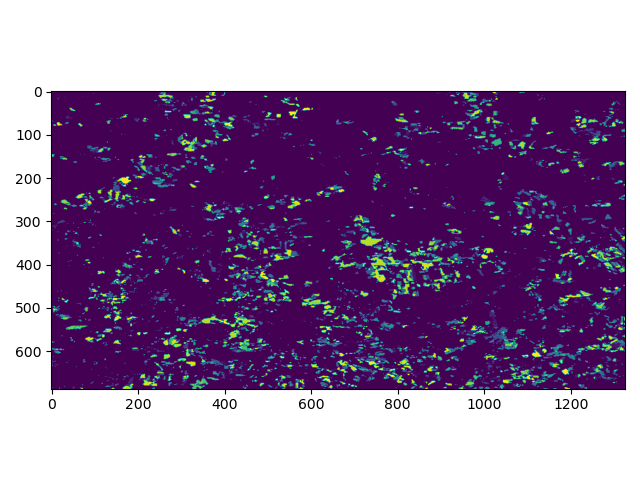

Download example tif raster file forest_loss_porijogi_wgs84.tif

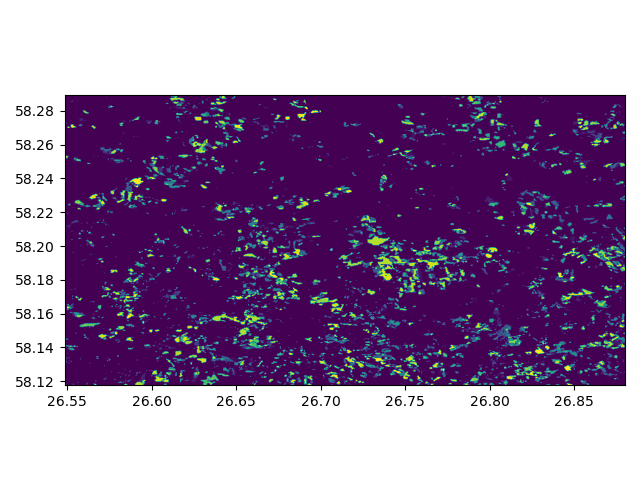

This is a categorical raster containing one band, which is representing the measured (by satellite) forest loss of the years 2001-2018. Each raster cell should have a value, from 0 to 18, representing 0: no forest loss, or one of the numbers 1-18, indicating forest less in this cell in the year 20xx.

Let’s open the dataset:

In [1]: import rasterio

In [2]: dataset = rasterio.open('source/_static/data/L5/forest_loss_porijogi_wgs84.tif')

The dataset handle in rasterio provides many useful informative attributes:

In [3]: print(dataset.name)

source/_static/data/L5/forest_loss_porijogi_wgs84.tif

In [4]: print(dataset.mode)

r

In [5]: print(dataset.count)

1

In [6]: print(dataset.width)

1326

In [7]: print(dataset.height)

687

In [8]: print(dataset.crs)

EPSG:4326

In [9]: print(dataset.bounds)

BoundingBox(left=26.548502689, bottom=58.117685726, right=26.880002689, top=58.289435726)

Another interesting, slightly more complex, attribute is the profile. Where we can quickly see a variety of important aspects.

In [10]: print(dataset.profile)

{'driver': 'GTiff', 'dtype': 'int16', 'nodata': 128.0, 'width': 1326, 'height': 687, 'count': 1, 'crs': CRS.from_epsg(4326), 'transform': Affine(0.0002500000000000014, 0.0, 26.548502689,

0.0, -0.0002500000000000043, 58.289435726), 'tiled': False, 'compress': 'lzw', 'interleave': 'band'}

As explained above in the introduction, this is the Geotransformation / Geotransform:

In [11]: print(dataset.transform)

| 0.00, 0.00, 26.55|

| 0.00,-0.00, 58.29|

| 0.00, 0.00, 1.00|

In [12]: for i in range(len(dataset.indexes) ):

....: print("{}: {}".format(i, dataset.dtypes[i]))

....:

0: int16

Each raster dataset can have several so called bands. Each band in Python and Rasterio is essentially handled as a Numpy array, ndarray. The same computationally efficient data structure that also underlies our Pandas dataframes.

# reading the first band (not from zero!)

In [13]: band1 = dataset.read(1)

In [14]: band1

Out[14]:

array([[ 0, 0, 0, ..., 0, 0, 0],

[ 0, 0, 0, ..., 0, 0, 0],

[ 0, 0, 0, ..., 0, 0, 0],

...,

[ 0, 17, 17, ..., 0, 0, 0],

[ 0, 0, 0, ..., 0, 0, 0],

[ 0, 0, 0, ..., 0, 0, 0]], dtype=int16)

And similarly to other plotting mechanisms, like we use for Pandas or Geopandas, we use matplotlib for the foundational functionality.

Now, as we learned, raster datasets are essentially “just” pixels in a properly organized grid - an image - we can use default plotting from matplotlib and numpy to plot a basic 2D image.

In [15]: import matplotlib.pyplot as plt

# add this in your Jupyter Notebook too

# %matplotlib inline

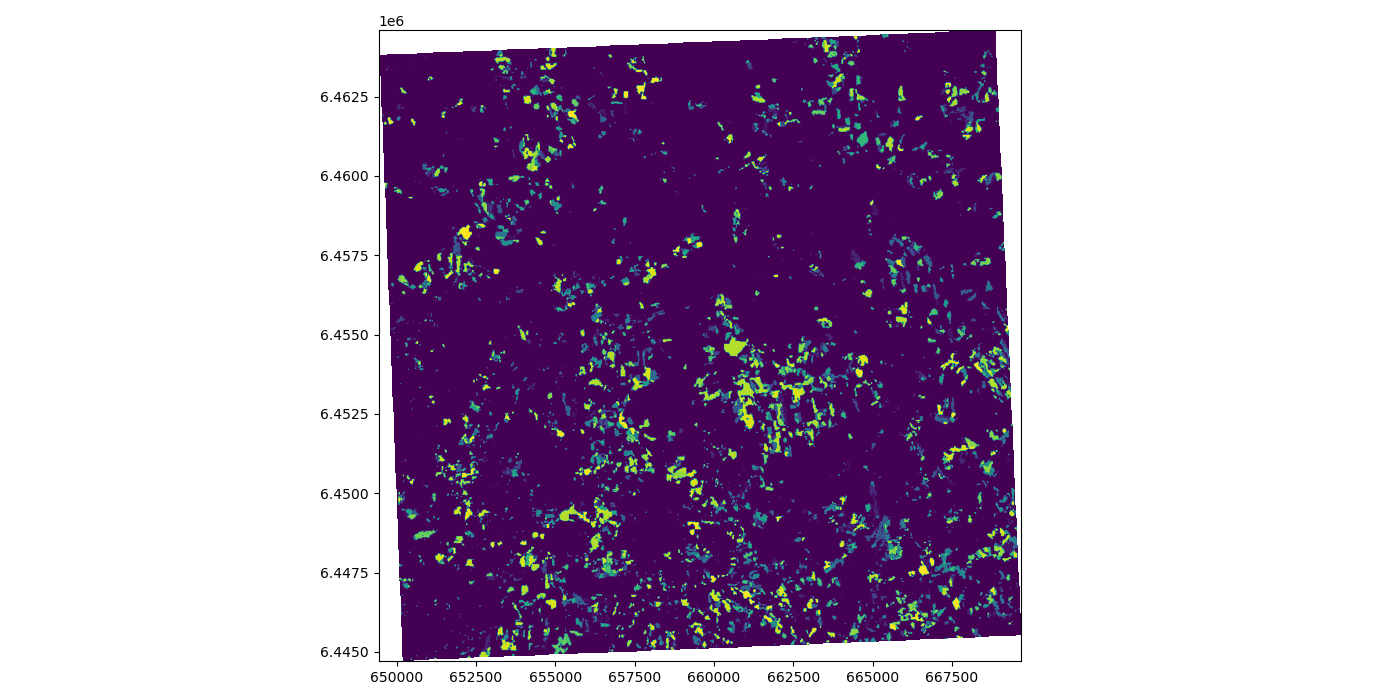

In [16]: plt.imshow(band1)

Out[16]: <matplotlib.image.AxesImage at 0x7f71f6aa2200>

In [17]: plt.tight_layout()

For convenience, Rasterio provides its own slightly advancd plot method, called show(), where we can either add the whole dataset or specific bands.

In order to retain the geotransform logic when plotting a rasterio image, it is of advantage to use the tuple-based nomenclature:

In [18]: from rasterio.plot import show

# band1 is just a numpy ndarray

# show(band1)

# a tuple -> (dataset, 1) indicating the first band in the raster dataset

# show((dataset, 1))

# the whole dataset, eg. RGB bands making up the normal colorspctrum

In [19]: show(dataset)

Out[19]: <AxesSubplot:>

In [20]: plt.tight_layout()

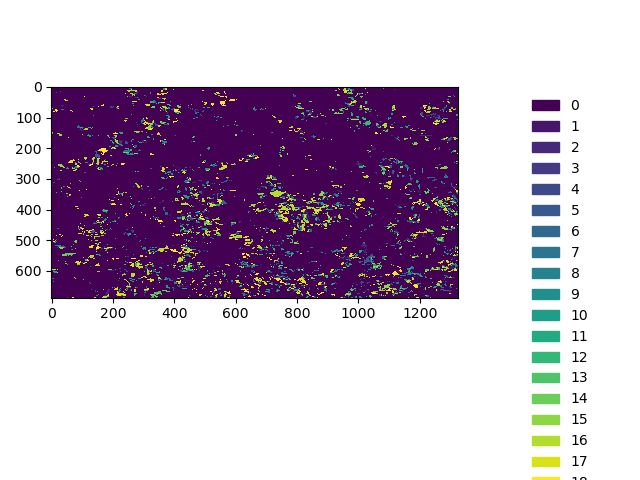

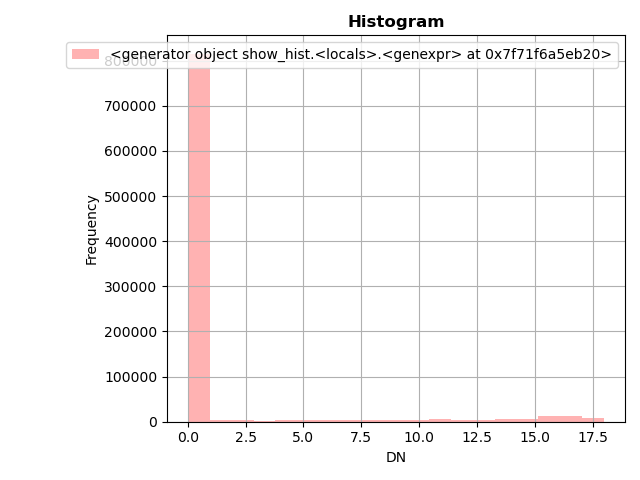

Let’s dig deeper into our categorical raster. We use numpy’s unique function to extract all occurring unique values (in our case: the year-based classes). And then we manually calculate the histgram with numpy, too:

In [21]: import numpy as np

# get classes

In [22]: uniq_vals = np.unique(band1)

# display sorted order

In [23]: print(sorted(uniq_vals))

[0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18]

# Patches = the matplotlib objects drawn

In [24]: counts, bins = np.histogram(band1, bins=18)

# Print histogram outputs

In [25]: for i in range(len(bins)-1):

....: print("bin lower bound:", bins[i])

....: print("counts:", counts[i])

....:

bin lower bound: 0.0

counts: 815440

bin lower bound: 1.0

counts: 4269

bin lower bound: 2.0

counts: 4179

bin lower bound: 3.0

counts: 1076

bin lower bound: 4.0

counts: 3273

bin lower bound: 5.0

counts: 4023

bin lower bound: 6.0

counts: 4418

bin lower bound: 7.0

counts: 3327

bin lower bound: 8.0

counts: 4611

bin lower bound: 9.0

counts: 3576

bin lower bound: 10.0

counts: 3983

bin lower bound: 11.0

counts: 5469

bin lower bound: 12.0

counts: 3706

bin lower bound: 13.0

counts: 2764

bin lower bound: 14.0

counts: 5237

bin lower bound: 15.0

counts: 6990

bin lower bound: 16.0

counts: 13465

bin lower bound: 17.0

counts: 21156

Now, a short side note on adding a custom legend to our plot. Sometimes, we can’t get the legend information from the image, so provide it directly. We use the “viridis” colormap as a base, which is a colorband with 256 colors forming the spectrum of this colormap. We then evenly take color definition elements out of that colormap and assign them to our classes. The even steps are derived from the number of values in our raster dataset, i.e. in this particular nump ndarray.

In [26]: from matplotlib.patches import Patch

In [27]: from matplotlib.colors import BoundaryNorm

In [28]: from matplotlib import rcParams, cycler

In [29]: fig, ax = plt.subplots()

In [30]: cmap = plt.cm.viridis

In [31]: lst = [int(x) for x in np.linspace(0,255,19)]

In [32]: legend_patches = [Patch(color=icolor, label=label) for icolor, label in zip( cmap(lst), sorted(uniq_vals))]

In [33]: ax.legend(handles=legend_patches, facecolor="white", edgecolor="white", bbox_to_anchor=(1.35, 1))

Out[33]: <matplotlib.legend.Legend at 0x7f71f6ae0fd0>

In [34]: plt.imshow(band1, cmap=cmap, interpolation='nearest')

Out[34]: <matplotlib.image.AxesImage at 0x7f71f6aa11b0>

In [35]: plt.tight_layout()

It also always advisable to look at the distribution of values visually, like a histogram plot:

In [36]: from rasterio.plot import show_hist

In [37]: show_hist(dataset, bins=19, lw=0.0, stacked=False, alpha=0.3, histtype='stepfilled', title="Histogram")

In [38]: plt.tight_layout()

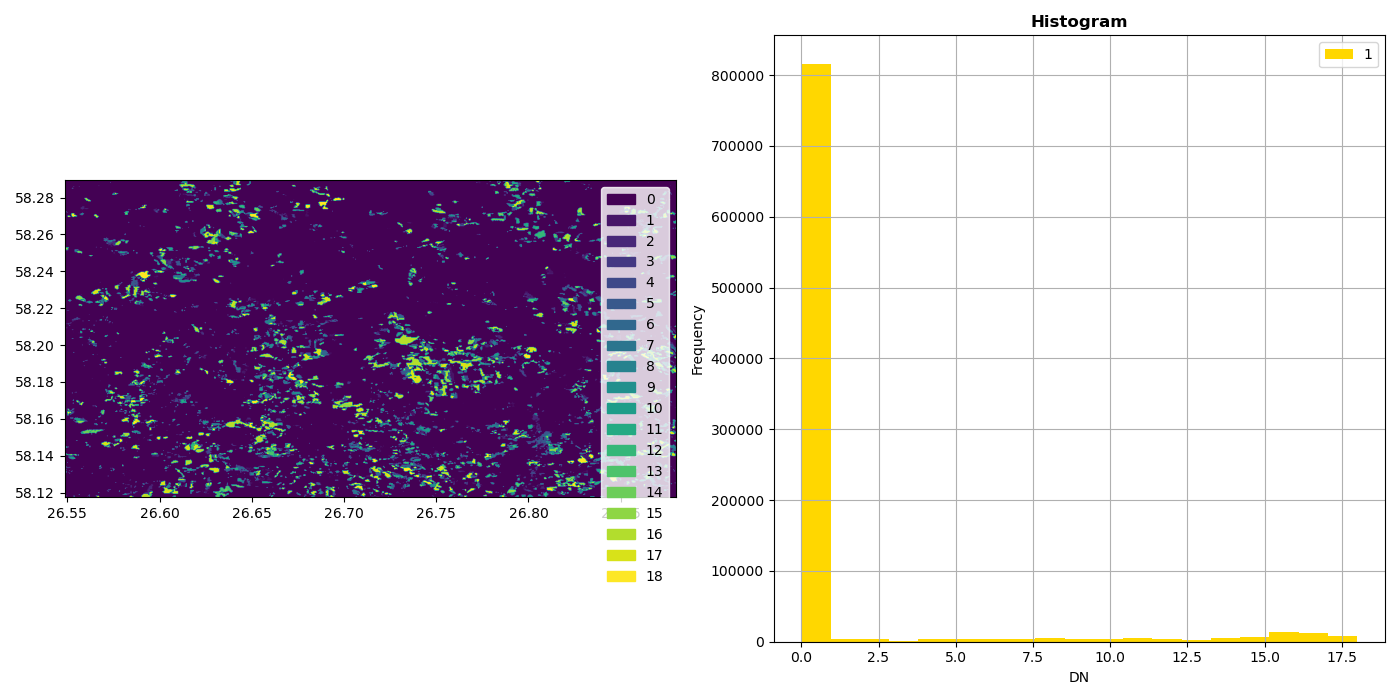

With matplotlib it is also easy to build multi-figure plots:

In [39]: fig, (ax_dat, ax_hist) = plt.subplots(1, 2, figsize=(14,7))

In [40]: ax_dat.legend(handles=legend_patches, facecolor="white", edgecolor="white")

Out[40]: <matplotlib.legend.Legend at 0x7f71f699a500>

In [41]: show((dataset, 1), ax=ax_dat)

Out[41]: <AxesSubplot:>

In [42]: show_hist((dataset, 1), bins=19, ax=ax_hist)

In [43]: plt.show()

In [44]: plt.tight_layout()

Reproject a raster

As we learned before, in order to perform meaning spatial operations and analyses, we need to make sure that our spatial datasets are in the same coordinate reference system. In addition, if we want to make assumptions about distances or areas we should consider using a projected coordinate reference system that has its units of measurement defined in e.g. metres. This is the same for raster data, where each pixel represents a certain area. Typically, all pixels are assumed to represent the same size.

In order to reproject a raster, we have to get a bit more involved. But before we get in-depth, a short side-note on Python resources handling. When files are opened, they are controlled by the Python process through a so called filehandle. If these filehandles are not closed properly, it can cause problems, such as data not been written to the harddisk, several filehandles looking at the same file causing undefind behaviour, or general resource-shortage, which becomes a problem, when many thousands of files are opened but not closed.

In Python we can open a file and work with it like so:

fh = open("data.csv", "rw"):

for lines in fh.readlines():

do_something()

fh.write("new data,1,2,3\n")

# fh.flush()

fh.close()

fh represents the filehandle. Once it is open, it will be around for the lifetime of your program or Python session. If you use it later again, you should make sure, that your data is always written properly, e.g. with the `` flush()`` method.

Python provides also a more “sophisticated” way, in the form similar to the “for” comprehensions that we use to go over lists, etc. Here Python manages not the iterating for us, but the opening and closing of the file.

Everything in the with block below is safe, and once the block is completed, the flush and close are managed by Python and the file is safely and reliably written and closed. We will see this pattern today occasionally.

with open("data.csv") as fh:

fh.read()

fh.write()

# done, no flush or close necessary

Now let’s reproject the forest loss example tif from WGS84 to the Estonian grid 1997, aka EPSG:3301. FYI, We are still using the same example tif raster file forest_loss_porijogi_wgs84.tif.

In [45]: import numpy as np

In [46]: import rasterio

In [47]: from rasterio.warp import calculate_default_transform, reproject, Resampling

In [48]: dst_crs = 'EPSG:3301'

In [49]: with rasterio.open('source/_static/data/L5/forest_loss_porijogi_wgs84.tif') as src:

....: transform, width, height = calculate_default_transform(src.crs, dst_crs, src.width, src.height, *src.bounds)

....: kwargs = src.meta.copy()

....: kwargs.update({

....: 'crs': dst_crs,

....: 'transform': transform,

....: 'width': width,

....: 'height': height

....: })

....: with rasterio.open('source/_static/data/L5/forest_loss_porijogi_3301.tif', 'w', **kwargs) as dst:

....: for i in range(1, src.count + 1):

....: reproject(

....: source=rasterio.band(src, i),

....: destination=rasterio.band(dst, i),

....: src_transform=src.transform,

....: src_crs=src.crs,

....: dst_transform=transform,

....: dst_crs=dst_crs,

....: resampling=Resampling.nearest)

....:

A lot of stuff happened here:

We do the imports and then we define our target CRS. No surprises here. Then we open a first

withblock to open our source dataset.we calculate a standard transformation (the pre-calculation so to say)

calculate_default_transformwith the information from source dataset and the taret CRS.We copy the metadata from the original source dataset into a dictionary object

kwargs(keyword arguments, just a structure with additional parameters and values)We update some important information in the kwargs object, in particular the newly calulated values for transform and CRS

We are still within the first

withblock for reading the source, and now open a nested newwithblock for writing the projected data to file.For each band then we do the actual

reprojectwith the calculated parameters from source to destination.

Let’s open the written file to look at it.

# quickly load and check

with rasterio.open('source/_static/data/L5/forest_loss_porijogi_3301.tif', 'r') as data2:

print(data2.profile)

show(data2, cmap=cmap)

More details: https://rasterio.readthedocs.io/en/stable/topics/reproject.html

Clipping a raster

One common task in raster processing is to clip raster files based on a Polygon. We will reuse the Porijõgi GeoJSON-file from last lecture or download here porijogi_sub_catchments.geojson.

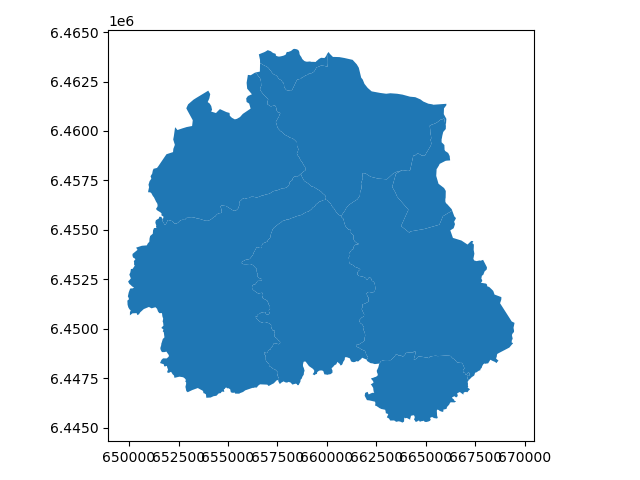

At first let’s juts quickly load and look at the vector dataset.

In [50]: import geopandas as gpd

In [51]: catchments = gpd.read_file('source/_static/data/L5/porijogi_sub_catchments.geojson')

In [52]: print(catchments.crs)

epsg:3301

In [53]: print(catchments.head(5))

OBJECTID NAME_1 AREA_1 Shape_Leng Shape_Area ID geometry

0 8 Idaoja 3823.427995 35446.162219 3.823428e+07 1 MULTIPOLYGON (((660834.858 6455555.914, 660851...

1 9 Keskjooks 5087.809731 42814.174755 5.087810e+07 2 MULTIPOLYGON (((666339.502 6455972.600, 666384...

2 10 Peeda 5634.162684 47792.268153 5.634163e+07 3 MULTIPOLYGON (((659914.002 6456514.131, 659817...

3 11 Sipe 890.280919 16449.028656 8.902809e+06 4 MULTIPOLYGON (((665928.914 6460634.243, 665985...

4 12 Tatra 3306.643841 31108.960376 3.306644e+07 5 MULTIPOLYGON (((658678.470 6457825.152, 658579...

# plot to quickly again to see its geographic layout

In [54]: catchments.plot()

Out[54]: <AxesSubplot:>

In [55]: plt.tight_layout()

In order to use the features, technically only the polygon geometries, for clipping in Rasterio, we need to provide them in a slightly more low-level format.

We remember that the fiona library works actually under the hood of our now well-known Geopandas library. With fiona we can open vector/feature datasets directly without loading them into a dataframe.

Here we extract the “low-level” geometry object with fiona in order to obtain our vector mask.

In [56]: import fiona

In [57]: with fiona.open("source/_static/data/L5/porijogi_sub_catchments.geojson", "r") as vectorfile:

....: shapes = [feature["geometry"] for feature in vectorfile]

....:

With this list shapes tha twe just created we can pass the polygons to the mask function of Rasterio to do the clipping.

The clipping itself is pretty straightfoward. Here we demonstrate the opening of the raster dataset without a with block, so simply opening it and working with the filehandle thereof, and eventually closing it again.

In [58]: from rasterio.mask import mask

# we have to use the newly created reprojected raster file

In [59]: data2 = rasterio.open('source/_static/data/L5/forest_loss_porijogi_3301.tif', 'r')

# Clip the raster with Polygon

In [60]: out_image, out_transform = mask(dataset=data2, shapes=shapes, crop=True)

In [61]: out_meta = data2.meta.copy()

In [62]: data2.close()

The whole work during the clipping process is very similar to the reprojecting workflow. As we change the dimension of the raster data, because we are literally clipping stuff off it, we have to retrieve a new transform together with the raw data. We keep some of the original meta information again, but we update of course dimensions and transform before we write the new dataset to file.

In [63]: print(out_meta)

{'driver': 'GTiff', 'dtype': 'int16', 'nodata': 128.0, 'width': 1107, 'height': 1088, 'count': 1, 'crs': CRS.from_epsg(3301), 'transform': Affine(18.286653237913676, 0.0, 649439.5163138955,

0.0, -18.286653237913676, 6464597.241364005)}

In [64]: out_meta.update({"driver": "GTiff",

....: "height": out_image.shape[1],

....: "width": out_image.shape[2],

....: "transform": out_transform})

....:

In [65]: with rasterio.open("source/_static/data/L5/forest_loss_clipped.tif", "w", **out_meta) as dest:

....: dest.write(out_image)

....:

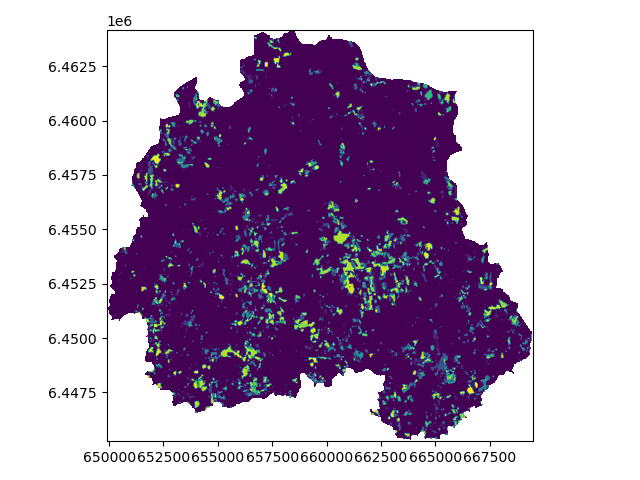

And let’s load the newly created clipped raster:

# quickly load and check

with rasterio.open('source/_static/data/L5/forest_loss_clipped.tif.tif', 'r') as data3:

print(data3.profile)

show(data3, cmap=cmap)

More details: https://rasterio.readthedocs.io/en/stable/topics/masking-by-shapefile.html

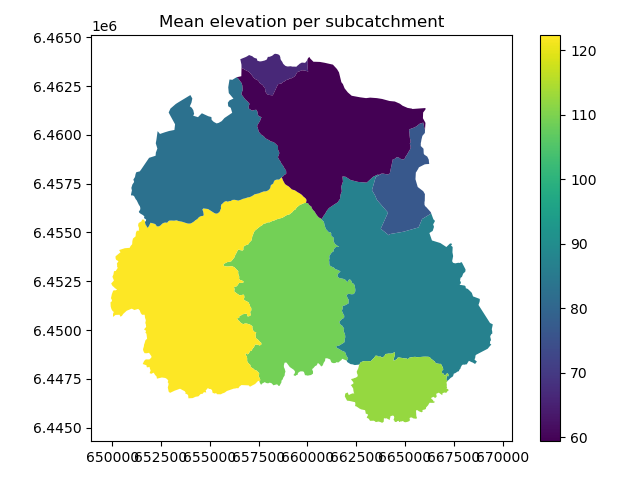

Calculating zonal statistics

Often we want to summarize raster datasets based on vector geometries, such as calculating the average elevation of specific area or aggregating summaries of areas or other count-based statistics per pixel under given polygons. Rasterstats is a Python module that works on top of Rasterio and does exactly that.

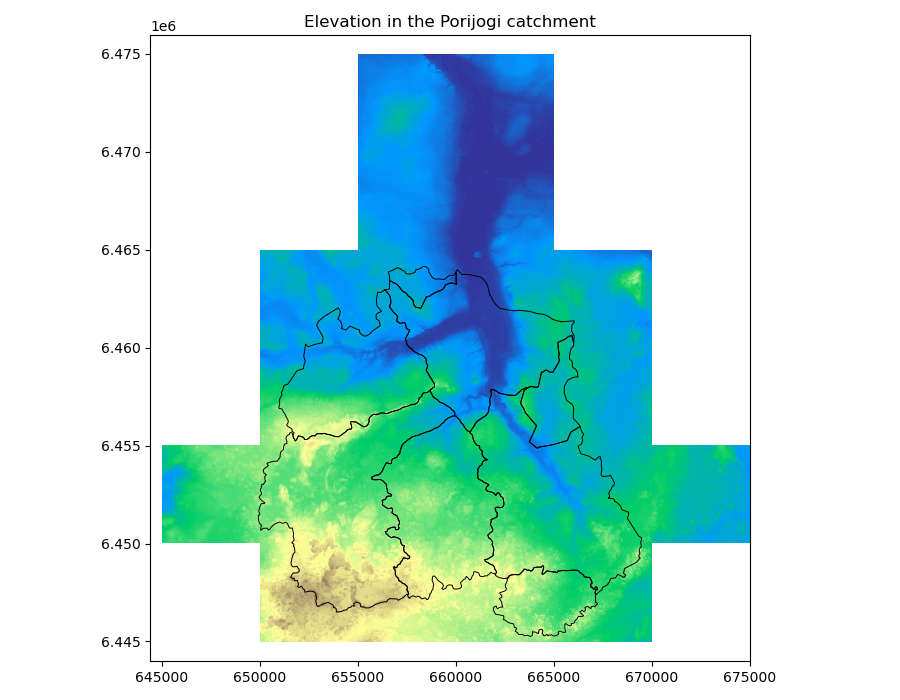

Continuous raster are representing a continuous surface of the distribution of a phenomenon in space - e.g. elevation or temperature. DEMs are continuous rasters and now we want to look at a Digital Elevation Model (DEM) raster file: dem.tif.

We are again reusing the Porijõgi GeoJSON-file from last lecture: porijogi_sub_catchments.geojson. These will be our polygons for which we want to aggregate summary statistics from the DEM raster.

In [66]: import geopandas as gpd

In [67]: catchments = gpd.read_file('source/_static/data/L5/porijogi_sub_catchments.geojson')

Now let’s open the DEM and inspect its attributes:

In [68]: demdata = rasterio.open('source/_static/data/L5/dem.tif')

In [69]: print(demdata.name)

source/_static/data/L5/dem.tif

In [70]: print(demdata.mode)

r

In [71]: print(demdata.count)

1

In [72]: print(demdata.width)

1020

In [73]: print(demdata.height)

1065

In [74]: print(demdata.crs)

EPSG:3301

In [75]: print(demdata.bounds)

BoundingBox(left=644405.5556, bottom=6443988.8889, right=675000.5556, top=6475948.8889)

And we can also plot vector features and raster datasets together:

Again, we make sure we are plotting on top of the same “axes”, we use the Rasterio show function and tell it to plot the the first band in the demdata dataset, and then we plot our Geopandas dataframe on top of it.

We have to be sure of course, that our datasets are in the same spatial coordinate reference system.

In [76]: fig, ax = plt.subplots(1, figsize=(9, 7))

In [77]: show((demdata, 1), cmap='terrain', interpolation='none', ax=ax)

Out[77]: <AxesSubplot:>

In [78]: catchments.plot(ax=ax, facecolor="none", edgecolor='black', lw=0.7)

Out[78]: <AxesSubplot:>

In [79]: plt.title("Elevation in the Porijogi catchment")

Out[79]: Text(0.5, 1.0, 'Elevation in the Porijogi catchment')

In [80]: plt.show()

In [81]: plt.tight_layout()

Importing and calling the zonal statistics function from the Rasterstats module is very easy. However, we have to be aware that we directly refer to the file paths of the datasets, and not to a variable containing a numpy array or dataframe!

We can name the desired statistics via the stats parameter. Check the detailed API which statistics are available.

In [82]: from rasterstats import zonal_stats

In [83]: zs = zonal_stats('source/_static/data/L5/porijogi_sub_catchments.geojson', 'source/_static/data/L5/dem.tif', stats=['mean','std'])

In [84]: print(zs)

[{'mean': 108.81127854956439, 'std': 20.45245360418255}, {'mean': 86.88054631660887, 'std': 16.95209830197268}, {'mean': 122.27791004234241, 'std': 25.821034407200845}, {'mean': 76.41216968715197, 'std': 6.690340937594575}, {'mean': 82.89976042687574, 'std': 23.864544169477856}, {'mean': 66.31877151178183, 'std': 6.77909304322478}, {'mean': 112.26344569806881, 'std': 15.545179792343868}, {'mean': 59.404531339850486, 'std': 16.974837452873903}]

The zs variable is a list of dictionary objects that holds the calculated statistics for each feature/polygon in the same order.

So we have to rely on the order of the features in the source GeoJSON file for the zonal_statistics to be the same as in our Geopandas dataframe.

But because computers we can, and we make this a list into a Pandas dataframe and just concat (join/merge by merely “plucking it onto”) as additional columns.

In [85]: import pandas as pd

In [86]: demstats_df = pd.DataFrame(zs)

In [87]: demstats_df.rename(columns={'mean':'dem_mean','std':'dem_std'}, inplace=True)

In [88]: catchments = pd.concat([catchments, demstats_df], axis=1)

We also rename the columns to be more explicit of the meaning of the calculated variable. Finally, we plot our catchments geodataframe based on the dem_mean column.

The distribution of mean elevation within the catchment makes sense, the outlet is in the North-East.

In [89]: fig, ax = plt.subplots(1, 1)

In [90]: plt.title("Mean elevation per subcatchment")

Out[90]: Text(0.5, 1.0, 'Mean elevation per subcatchment')

In [91]: catchments.plot(column='dem_mean', ax=ax, legend=True)

Out[91]: <AxesSubplot:title={'center':'Mean elevation per subcatchment'}>

In [92]: plt.tight_layout()

Launch in the web/MyBinder: