Data reclassification

Reclassifying data based on specific criteria is a common task in GIS analysis. The purpose of this lesson is to see how we can reclassify values based on arbitrary criteria, such as:

1. if available space in a pub is less than the space in my wardrobe

AND

2. the temperature outside is warmer than my beer

------------------------------------------------------

IF TRUE: ==> I go and drink my beer outside

IF NOT TRUE: ==> I go and enjoy my beer inside at a table

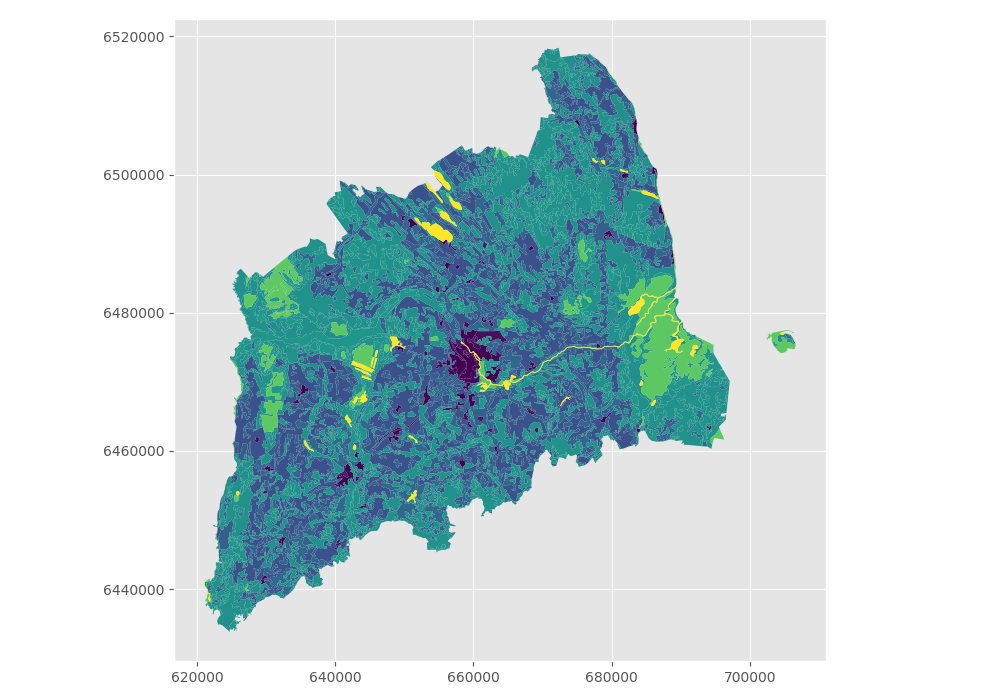
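The playful rule above is simply two conditions joined by a logical AND. A minimal sketch in Python, where the function name, the parameter names and all numbers are made up for illustration:

```python
def where_to_drink(pub_space_m2, wardrobe_space_m2, outside_temp_c, beer_temp_c):
    """Return 'outside' only if BOTH criteria are true, otherwise 'inside'."""
    pub_is_cramped = pub_space_m2 < wardrobe_space_m2   # criterion 1
    warm_outside = outside_temp_c > beer_temp_c         # criterion 2
    if pub_is_cramped and warm_outside:
        return "outside"
    return "inside"


# Hypothetical values, purely for illustration
decision_1 = where_to_drink(1.5, 2.0, 21.0, 6.0)    # cramped pub, warm day
decision_2 = where_to_drink(40.0, 2.0, 21.0, 6.0)   # roomy pub, warm day
print(decision_1, decision_2)
```

The classifiers later in this lesson follow the same pattern: test a condition per feature and write the resulting class into a new column.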

Even though the above would make an interesting case study, we will use slightly more traditional cases to learn classification. We will use the Corine land cover layer from the year 2012 and a Population Matrix dataset from Estonia, and classify some of their features either with our own self-made classifier or with ready-made classifiers that are commonly used, e.g. when doing visualizations.

The target in this part of the lesson is to:

- classify the bogs into big and small bogs where

- a big bog is a bog that is larger than the average size of all bogs in our study region

- a small bog is a bog that is smaller than that average

- use ready-made classifiers from the pysal module to classify municipalities into multiple classes.

Download data

Download (and then extract) the dataset zip-package used during this lesson from this link.

You should have the following files in the data folder:

corine_legend/ corine_tartu.shp population_admin_units.prj

corine_tartu.cpg corine_tartu.shp.xml population_admin_units.sbn

corine_tartu.dbf corine_tartu.shx population_admin_units.sbx

corine_tartu.prj L4.zip population_admin_units.shp

corine_tartu.sbn population_admin_units.cpg population_admin_units.shp.xml

corine_tartu.sbx population_admin_units.dbf population_admin_units.shx

Data preparation

Before doing any classification, we need to prepare our data a little bit.

Let’s read the data in, have a look at the columns, and plot our data so that we can see what it looks like on a map.

import pandas as pd

import geopandas as gpd

import matplotlib.pyplot as plt

# File path

fp = r"Data\corine_tartu.shp"

data = gpd.read_file(fp)

Let’s see what we have.

In [1]: data.head(5)

Out[1]:

code_12 ... geometry

0 111 ... POLYGON Z ((5290566.200000002 4034511.45 0, 52...

1 112 ... (POLYGON Z ((5259932.400000001 3993825.37 0, 5...

2 112 ... POLYGON Z ((5268691.710000002 3996582.90000000...

3 112 ... POLYGON Z ((5270008.730000001 4001777.44000000...

4 112 ... POLYGON Z ((5278194.410000001 4003869.23000000...

[5 rows x 7 columns]

We see that the land use in the column “code_12” is given as a numerical code, and we don’t yet know what the codes mean. So we should first join the “clc_legend” table to find out:

import pandas as pd

fp_clc = r"Data\corine_legend\clc_legend.csv"

data_legend = pd.read_csv(fp_clc, sep=';', encoding='latin1')

data_legend.head(5)

We could now try to merge / join the two dataframes, ideally by the ‘code_12’ column of “data” and the “CLC_CODE” of “data_legend”.

display(data.dtypes)

display(data_legend.dtypes)

# please don't actually do it right now, it might cause extra troubles later

# data = data.merge(data_legend, how='inner', left_on='code_12', right_on='CLC_CODE')

But if we try, we will receive an error telling us that the columns are of different data types and therefore can’t be used as a join key. So we have to add a column that holds the codes in the same type. Here we add a column to “data” in which we transform the string-based “code_12” into an integer.

In [2]: def change_type(row):
   ...:     code_as_int = int(row['code_12'])
   ...:     return code_as_int
   ...:

In [3]: data['clc_code_int'] = data.apply(change_type, axis=1)

In [4]: data.head(2)

Out[4]:

code_12 ... clc_code_int

0 111 ... 111

1 112 ... 112

[2 rows x 8 columns]

Here we are “casting” the string-based value, which happens to be a number, to an actual numeric data type using the int() function. Pandas (and therefore also Geopandas) also provides a built-in method with similar functionality, astype(), e.g. data['code_astype_int'] = data['code_12'].astype('int64', copy=True)

Both versions can go wrong if the String cannot be interpreted as a number, and we should be more defensive (more details later, don’t worry right now).
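As a minimal sketch of the cast-then-join idea, the snippet below uses two tiny toy tables instead of the real shapefile and legend CSV. The frame names and the three rows are assumptions chosen for illustration; only the column names mirror the lesson data.

```python
import pandas as pd

# Toy stand-ins for the land cover table and the legend (rows are made up)
landcover = pd.DataFrame({"code_12": ["111", "112", "412"]})
legend = pd.DataFrame({
    "CLC_CODE": [111, 112, 412],
    "LABEL3": ["Continuous urban fabric",
               "Discontinuous urban fabric",
               "Peat bogs"],
})

# Vectorised cast of the whole text column to integers
landcover["clc_code_int"] = landcover["code_12"].astype("int64")

# With matching key dtypes the join behaves as expected
joined = landcover.merge(legend, how="inner",
                         left_on="clc_code_int", right_on="CLC_CODE")
print(joined[["code_12", "LABEL3"]])
```

Casting the whole column at once with astype() is usually faster than calling int() row by row through apply(), although both give the same integers here.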

Now we can merge/join the legend dataframe into our Corine land use dataframe:

In [5]: data = data.merge(data_legend, how='inner', left_on='clc_code_int', right_on='CLC_CODE', suffixes=('', '_legend'))

We have now also added more columns. Let’s drop a few, so we can focus on the data we need.

In [6]: selected_cols = ['ID','Remark','Shape_Area','CLC_CODE','LABEL3','RGB','geometry']

# Select data

In [7]: data = data[selected_cols]

# What are the columns now?

In [8]: data.columns

Out[8]: Index(['ID', 'Remark', 'Shape_Area', 'CLC_CODE', 'LABEL3', 'RGB', 'geometry'], dtype='object')

Before we plot, let’s check the coordinate system.

# Check coordinate system information

In [9]: data.crs

Out[9]:

{'ellps': 'GRS80',

'lat_0': 52,

'lon_0': 10,

'no_defs': True,

'proj': 'laea',

'units': 'm',

'x_0': 4321000,

'y_0': 3210000}

Okay, we can see that the units are in meters, but …

… geographers will realise that the Corine dataset is in the ETRS89 / LAEA Europe coordinate system, aka EPSG:3035. Because it is a European dataset it is in the recommended CRS for Europe-wide data. It is a single CRS for all of Europe and predominantly used for statistical mapping at all scales and other purposes where true area representation is required.

However, being in Estonia and only using an Estonian part of the data, we should consider reprojecting it into the Estonian national grid (aka Estonian Coordinate System of 1997 -> EPSG:3301) before we plot or calculate the area of our bogs.

In [10]: data_proj = data.to_crs(epsg=3301)

# Calculate the area of each polygon (the bogs are selected later)

In [11]: data_proj['area'] = data_proj.area

# What do we have?

In [12]: data_proj['area'].head(2)

Out[12]:

0 514565.037797

1 153.104211

Name: area, dtype: float64

Let’s plot the data and use column ‘CLC_CODE’ as our color.

In [13]: data_proj.plot(column='CLC_CODE', linewidth=0.05)

Out[13]: <matplotlib.axes._subplots.AxesSubplot at 0x150118b5dd8>

# Use tight layout and remove empty whitespace around our map

In [14]: plt.tight_layout()

Let’s see what kind of values we have in the ‘CLC_CODE’ and ‘LABEL3’ columns.

In [15]: print(list(data_proj['CLC_CODE'].unique()))

[111, 112, 121, 122, 124, 131, 133, 141, 142, 211, 222, 231, 242, 243, 311, 312, 313, 321, 324, 411, 412, 511, 512]

In [16]: print(list(data_proj['LABEL3'].unique()))

['Continuous urban fabric', 'Discontinuous urban fabric', 'Industrial or commercial units', 'Road and rail networks and associated land', 'Airports', 'Mineral extraction sites', 'Construction sites', 'Green urban areas', 'Sport and leisure facilities', 'Non-irrigated arable land', 'Fruit trees and berry plantations', 'Pastures', 'Complex cultivation patterns', 'Land principally occupied by agriculture, with significant areas of natural vegetation', 'Broad-leaved forest', 'Coniferous forest', 'Mixed forest', 'Natural grasslands', 'Transitional woodland-shrub', 'Inland marshes', 'Peat bogs', 'Water courses', 'Water bodies']

Okay, we have different kinds of land cover in our data. Let’s select only the bogs. Selecting specific rows from a DataFrame based on some value(s) is easy to do in Pandas / Geopandas using the indexer called .loc[], read more from here.

# Select bogs (i.e. 'Peat bogs' in the data) and make a proper copy out of our data

In [17]: bogs = data_proj.loc[data_proj['LABEL3'] == 'Peat bogs'].copy()

In [18]: bogs.head(2)

Out[18]:

ID ... area

2214 EU-2056784 ... 4.771837e+05

2215 EU-2056806 ... 8.916207e+06

[2 rows x 8 columns]
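The selection works because the comparison produces a boolean Series (a “mask”) that .loc[] uses to keep only the rows marked True. A small sketch on a made-up table (the frame name and the values are illustrative assumptions):

```python
import pandas as pd

# Made-up land cover rows; only the column names follow the lesson data
landcover = pd.DataFrame({
    "LABEL3": ["Peat bogs", "Pastures", "Peat bogs", "Water bodies"],
    "area": [477183.7, 120000.0, 8916207.0, 56000.0],
})

# Step 1: the comparison yields one True/False value per row
is_bog = landcover["LABEL3"] == "Peat bogs"

# Step 2: .loc keeps the True rows; .copy() makes an independent table
bogs_only = landcover.loc[is_bog].copy()

# Changing the copy leaves the original table untouched
bogs_only["area_km2"] = bogs_only["area"] / 1_000_000
print(bogs_only)
```

Note that the selected rows keep their original index labels, which is why the bogs in the output above start at index 2214 rather than 0.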

Calculations in DataFrames

Okay, now we have our bogs dataset ready. The aim was to classify those bogs into small and big bogs based on the average size of all bogs in our study area. Thus, we need to calculate the average size of our bogs.

We also remember that the CRS is projected with units in meters, so the calculated areas are in square meters. Let’s change those into square kilometers so they are easier to read. Doing calculations in Pandas / Geopandas is easy:

In [19]: bogs['area_km2'] = bogs['area'] / 1000000

# What is the mean size of our bogs?

In [20]: l_mean_size = bogs['area_km2'].mean()

In [21]: l_mean_size

Out[21]: 2.1555886794441346

Okay, so the mean size of our bogs is approximately 2.16 square kilometers.

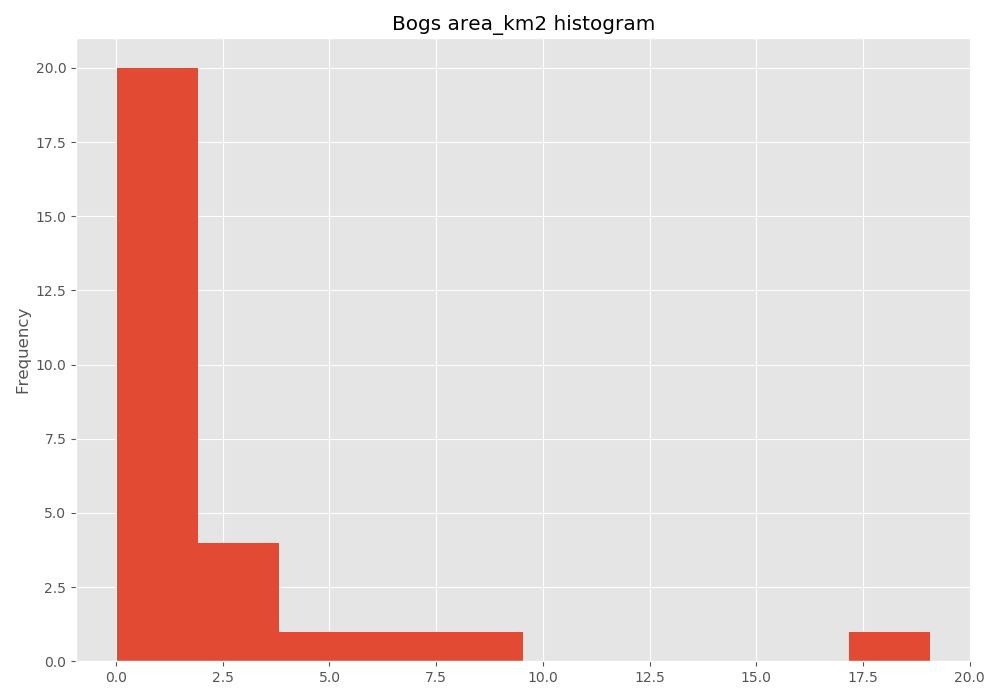

But to understand the overall distribution of the bog sizes, we can use a histogram. A histogram shows how the numerical values of a dataset are distributed. It shows the frequency of values, i.e. how many individual features fall within each “bin”.

# Plot

In [22]: fig, ax = plt.subplots()

In [23]: bogs['area_km2'].plot.hist(bins=10);

# Add title

In [24]: plt.title("Bogs area_km2 histogram")

Out[24]: Text(0.5, 1.0, 'Bogs area_km2 histogram')

In [25]: plt.tight_layout()
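To make the idea of bins concrete, the counts behind a histogram can also be computed directly with numpy. The areas below are invented for illustration and are not the real bog sizes:

```python
import numpy as np

# Invented bog areas in km2: many small values and a few large ones
areas_km2 = np.array([0.2, 0.4, 0.5, 0.9, 1.1, 1.3, 2.5, 3.0, 7.8, 10.0])

# Split the value range into 5 equally wide bins and count features per bin
counts, bin_edges = np.histogram(areas_km2, bins=5)

for left, right, count in zip(bin_edges[:-1], bin_edges[1:], counts):
    print("{:5.2f} - {:5.2f} km2: {} bogs".format(left, right, count))

# In a skewed distribution like this, the mean is pulled above the median
print("mean:", areas_km2.mean(), "median:", np.median(areas_km2))
```

When most features are small and a few are very large, a threshold based on the mean puts the majority of features into the “small” class, which is worth keeping in mind for the classification below.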

Note

It is also easy to calculate e.g. the sum or difference of two or more columns (plus all other mathematical operations), e.g.:

# Sum two columns

data['sum_of_columns'] = data['col_1'] + data['col_2']

# Subtract two columns from a third one

data['difference'] = data['some_column'] - data['col_1'] - data['col_2']

Classifying data

Creating a custom classifier

Let’s create a function that classifies the geometries into two classes based on a given threshold parameter.

If the area of a polygon is lower than the threshold value (the average size of the bogs), the output column will get the value 0; otherwise it will get the value 1. This kind of classification is often called a binary classification.

First we need to create a function for our classification task. This function takes a single row of the GeoDataFrame as input, plus a few other parameters that we can use.

def binaryClassifier(row, source_col, output_col, threshold):
    # If the area of the input geometry is lower than the threshold value
    if row[source_col] < threshold:
        # Update the output column with value 0
        row[output_col] = 0
    # Otherwise (area equal to or higher than the threshold) update with value 1
    else:
        row[output_col] = 1
    # Return the updated row
    return row

Let’s create an empty column for our classification

In [26]: bogs['small_big'] = None

We can use our custom function by using a Pandas / Geopandas function called .apply().

Thus, let’s apply our function and do the classification.

In [27]: bogs = bogs.apply(binaryClassifier, source_col='area_km2', output_col='small_big', threshold=l_mean_size, axis=1)

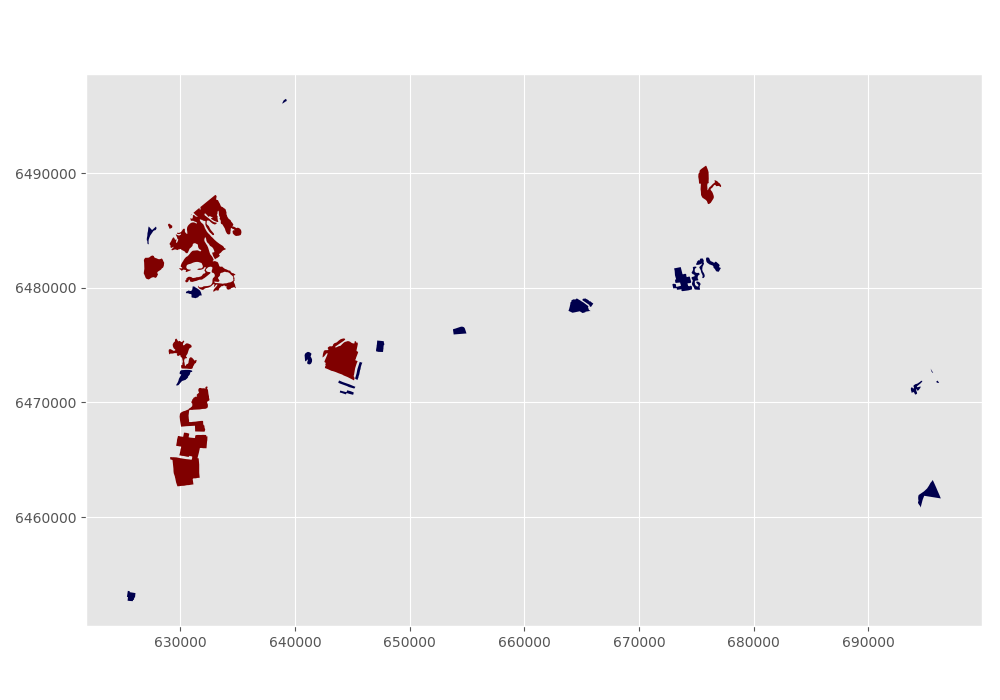

Let’s plot these bogs and see what they look like.

In [28]: bogs.plot(column='small_big', linewidth=0.05, cmap="seismic")

Out[28]: <matplotlib.axes._subplots.AxesSubplot at 0x1501e132c18>

In [29]: plt.tight_layout()

Okay, so it looks like they are correctly classified, good. As a final step, let’s save the bogs as a file to disk.

outfp_bogs = r"Data\bogs.shp"

bogs.to_file(outfp_bogs)

Note

There is also a way of doing this without a function, but the previous example might make it easier to understand how the classification works. A more complicated set of criteria should definitely be handled in a function, as that is much more readable.

Let’s give a value 0 for small bogs and value 1 for big bogs by using an alternative technique:

bogs['small_big_alt'] = None

bogs.loc[bogs['area_km2'] < l_mean_size, 'small_big_alt'] = 0

bogs.loc[bogs['area_km2'] >= l_mean_size, 'small_big_alt'] = 1
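A third option, sketched here under the assumption that numpy is available, is np.where(), which evaluates the condition for the whole column at once. The toy frame and the helper function name are illustrative and not part of the lesson data:

```python
import numpy as np
import pandas as pd

# Toy bog areas (made up); their mean is exactly 3.0 km2
bogs_demo = pd.DataFrame({"area_km2": [0.5, 1.0, 4.5, 6.0]})
mean_size = bogs_demo["area_km2"].mean()

# Vectorised: 0 where the area is below the threshold, 1 otherwise
bogs_demo["small_big"] = np.where(bogs_demo["area_km2"] < mean_size, 0, 1)


# The same rule as a per-value function, applied to a single column
def classify_value(value, threshold):
    return 0 if value < threshold else 1


bogs_demo["small_big_apply"] = bogs_demo["area_km2"].apply(
    classify_value, threshold=mean_size
)
print(bogs_demo)
```

Both columns hold the same classes; the vectorised form tends to be faster on large tables, while the function form is easier to extend with more criteria.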

Todo

Task:

Try to change your classification criteria and see how your results change! Also try a different land use code/label and see how that changes the results.

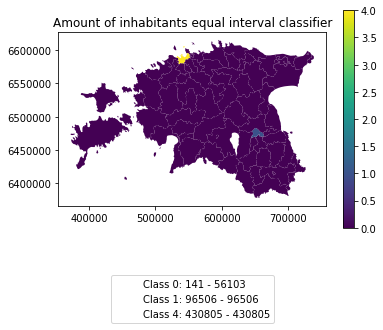

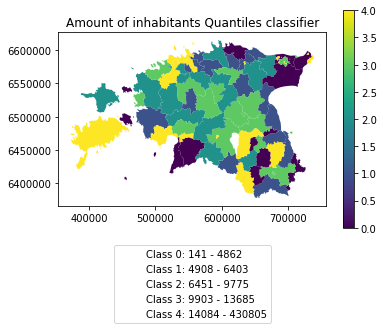

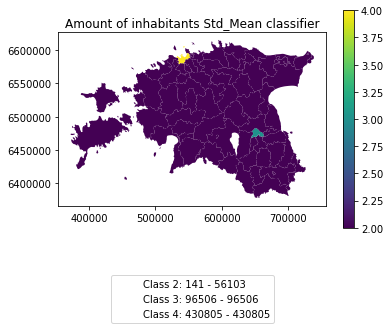

Classification based on common classification schemes

The pysal module is an extensive Python library including various functions and tools for spatial data analysis. It also includes the most common data classification schemes, which are used e.g. when visualizing data. The map classification schemes available in the pysal module are (see here for more details):

- Box_Plot

- Equal_Interval

- Fisher_Jenks

- Fisher_Jenks_Sampled

- HeadTail_Breaks

- Jenks_Caspall

- Jenks_Caspall_Forced

- Jenks_Caspall_Sampled

- Max_P_Classifier

- Maximum_Breaks

- Natural_Breaks

- Quantiles

- Percentiles

- Std_Mean

- User_Defined

For this we will use the administrative units dataset for population. It is at the Estonian “vald” level, which corresponds to the municipality level. It has the following fields:

- VID, an Id for the “vald”

- KOOD, a unique code for the Statistics Board

- NIMI, the name of the municipality

- population, the number of people living in the municipality

- geometry, the polygon for the municipality district border

Let’s apply one of those schemes to our data and classify the population into 5 classes.

Choosing the number of classes: if you choose too many classes, the map reader has to remember too much when viewing the map, and the class colors may become difficult to tell apart. On the other hand, if you choose too few classes, the data is oversimplified, possibly hiding important patterns. Additionally, each class may then group dissimilar items together, which is in direct opposition to one of the main goals of classification. Typically in cartography three to seven classes are preferred, and five is a common choice for thematic maps.

import geopandas as gpd

import matplotlib.pyplot as plt

# File path

fp = r"Data\population_admin_units.shp"

acc = gpd.read_file(fp)

In [30]: import pysal as ps

# Define the number of classes

In [31]: n_classes = 5

The classifier needs to be initialized first with the make() function, which takes the number of desired classes as an input parameter.

# Create a Natural Breaks classifier

In [32]: classifier = ps.Natural_Breaks.make(k=n_classes)

Now we can apply that classifier to our data in much the same way as in our previous examples. But we will run into the “numbers as text” problem again.

# data types in the population dataset

In [33]: acc.dtypes

Out[33]:

VID float64

KOOD object

NIMI object

population object

geometry object

dtype: object

Therefore, we have to change the column type for population into a numerical data type first:

In [34]: import numpy as np

In [35]: def change_type_defensively(row):
   ....:     try:
   ....:         return int(row['population'])
   ....:     except Exception:
   ....:         return np.nan
   ....: acc['population_int'] = acc.apply(change_type_defensively, axis=1)
   ....: acc.head(5)
   ....:

Out[35]:

VID ... population_int

0 41158132.0 ... 5435

1 41158133.0 ... 15989

2 41158134.0 ... 3369

3 41158135.0 ... 10793

4 41158136.0 ... 4514

[5 rows x 6 columns]

Here we demonstrate a more defensive strategy for converting data types. Many operations can raise exceptions, and then you can’t ignore the problem anymore because your code breaks.

But with try/except we can catch expected exceptions (which would otherwise crash the program) and react appropriately.

Pandas (and therefore also Geopandas) also provides a built-in function with similar functionality, to_numeric(), e.g. data['code_tonumeric_int'] = pd.to_numeric(data['code_12'], errors='coerce'). Beware: to_numeric() is called as a pandas (pd) function, not as a method on the dataframe.

Both versions will at least return a useful NaN value (“not a number”, a sort of no-data value) without crashing. Pandas, Geopandas, numpy and many other Python libraries have functionality to work with or ignore NaN values without breaking calculations.
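A short sketch of how to_numeric() with errors='coerce' behaves on text that is only partly numeric. The Series below is made up; the “n/a” and “unknown” entries stand in for whatever unparsable values a real dataset might contain:

```python
import pandas as pd

# Made-up population column read as text, with two unparsable entries
population_raw = pd.Series(["5435", "15989", "n/a", "unknown", "4514"])

# Unparsable entries become NaN instead of raising an exception
population_num = pd.to_numeric(population_raw, errors="coerce")
print(population_num)

# Count the problem rows, then aggregate; sum() skips NaN by default
n_missing = int(population_num.isna().sum())
total = population_num.sum()
print("missing:", n_missing, "total:", total)
```

Checking the number of NaN values right after the conversion is a cheap way to notice how many rows could not be interpreted, before they silently drop out of later calculations.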

# Classify the data

In [36]: acc['population_classes'] = acc[['population_int']].apply(classifier)

# Let's see what we have

In [37]: acc.head()

Out[37]:

VID KOOD ... population_int population_classes

0 41158132.0 0698 ... 5435 0

1 41158133.0 0855 ... 15989 2

2 41158134.0 0732 ... 3369 0

3 41158135.0 0917 ... 10793 1

4 41158136.0 0142 ... 4514 0

[5 rows x 7 columns]

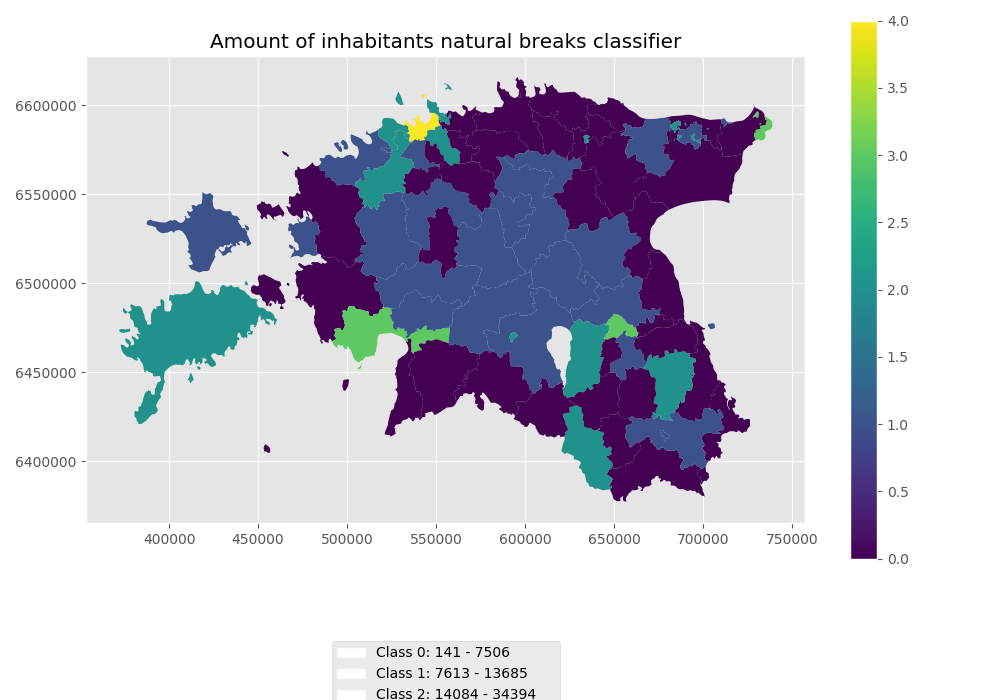

Okay, so we have added a column to our DataFrame where our input column was classified into 5 different classes (numbers 0-4) based on Natural Breaks classification.

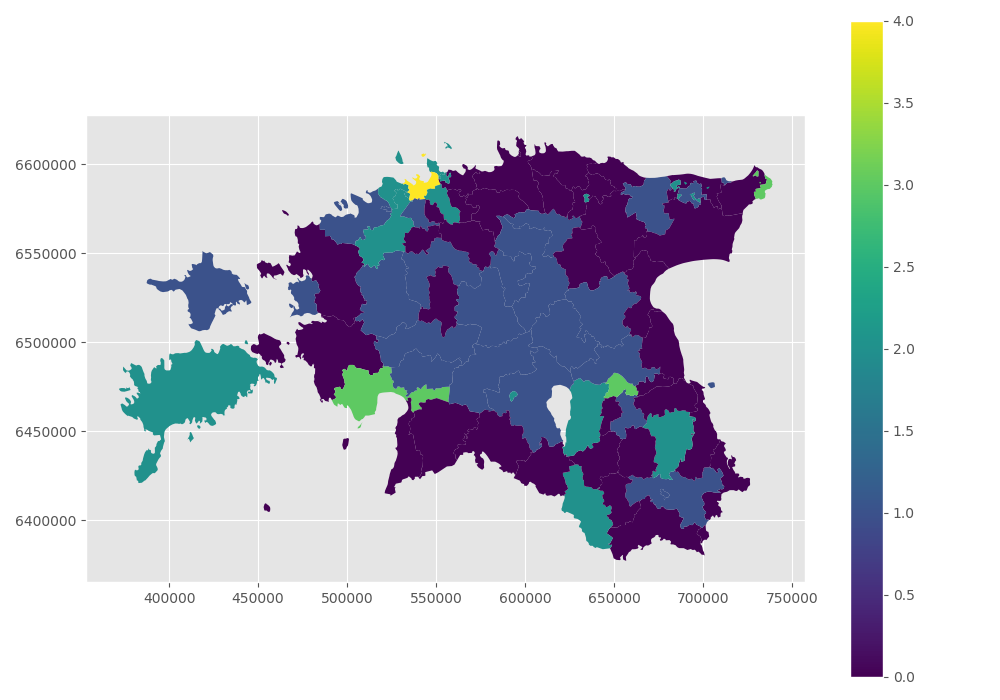

Great, now we have those values in our population GeoDataFrame. Let’s visualize the results and see how they look.

# Plot

In [38]: acc.plot(column="population_classes", linewidth=0, legend=True);

# Use tight layout

In [39]: plt.tight_layout()

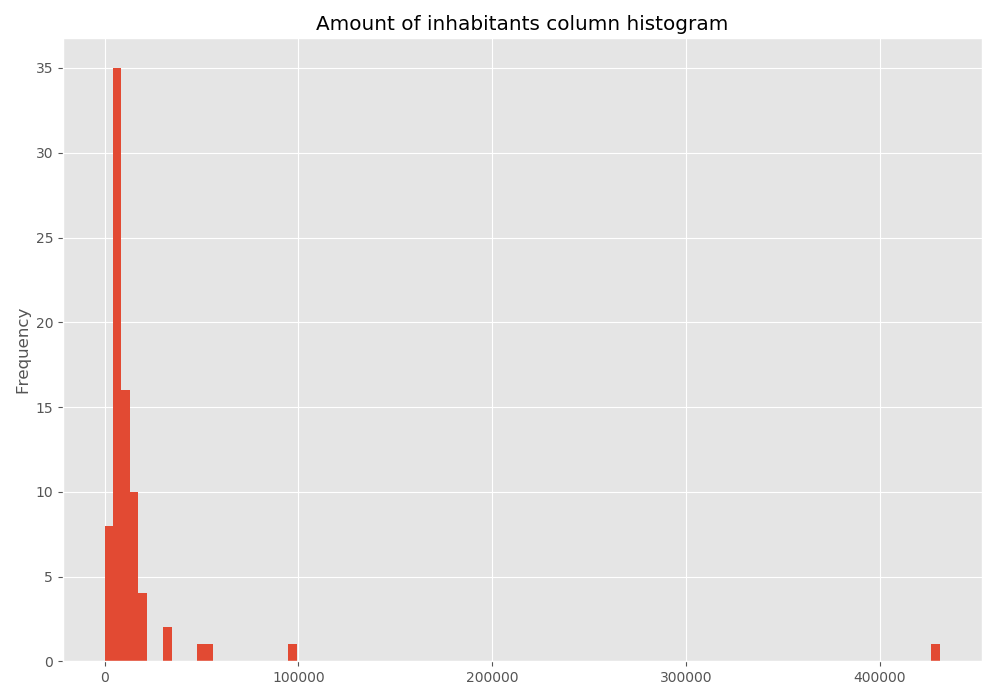

Now we have the plot, but it would be great to know the actual class ranges for the values. So let’s plot the histogram again.

# Plot

In [40]: fig, ax = plt.subplots()

In [41]: acc["population_int"].plot.hist(bins=100);

# Add title

In [42]: plt.title("Amount of inhabitants column histogram")

Out[42]: Text(0.5, 1.0, 'Amount of inhabitants column histogram')

In [43]: plt.tight_layout()

In order to get the min() and max() of each class, we can group the rows by class with groupby.

In [44]: grouped = acc.groupby('population_classes')

# legend_dict = { 'class from to' : 'white'}

In [45]: legend_dict = {}

In [46]: for cl, valds in grouped:
   ....:     minv = valds['population_int'].min()
   ....:     maxv = valds['population_int'].max()
   ....:     print("Class {}: {} - {}".format(cl, minv, maxv))
   ....:

Class 0: 141 - 7506

Class 1: 7613 - 13685

Class 2: 14084 - 34394

Class 3: 50403 - 96506

Class 4: 430805 - 430805
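The same per-class ranges can also be obtained without an explicit loop by aggregating the grouped column. This is only a sketch on a toy table; the six rows reuse a few of the class limits printed above and are not the full dataset:

```python
import pandas as pd

# Toy table: two municipalities per class, values borrowed from the output above
demo = pd.DataFrame({
    "population_int": [141, 7506, 7613, 13685, 14084, 34394],
    "population_classes": [0, 0, 1, 1, 2, 2],
})

# One row per class, with the minimum and maximum population as columns
class_ranges = (
    demo.groupby("population_classes")["population_int"].agg(["min", "max"])
)
print(class_ranges)
```

The result is a small DataFrame indexed by class, which is convenient if the ranges are needed later, for example to build legend labels.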

And in order to add our custom legend info to the plot, we need to employ a bit more of Python’s matplotlib magic:

In [47]: import matplotlib.patches as mpatches

In [48]: import matplotlib.pyplot as plt

In [49]: import collections

# legend_dict, a special ordered dictionary (which reliably remembers order of adding things) that holds our class description and gives it a colour on the legend (we leave it "background" white for now)

In [50]: legend_dict = collections.OrderedDict([])

# Fill the legend dictionary with one entry per class

In [51]: for cl, valds in grouped:
   ....:     minv = valds['population_int'].min()
   ....:     maxv = valds['population_int'].max()
   ....:     legend_dict.update({"Class {}: {} - {}".format(cl, minv, maxv): "white"})
   ....:

# Prepare a figure and axes so that several plots can go into one figure

In [52]: fig, ax = plt.subplots()

# plot the dataframe, with the natural breaks colour scheme

In [53]: acc.plot(ax=ax, column="population_classes", linewidth=0, legend=True);

# the custom "patches" per legend entry of our additional labels

In [54]: patchList = []

In [55]: for key in legend_dict:
   ....:     data_key = mpatches.Patch(color=legend_dict[key], label=key)
   ....:     patchList.append(data_key)
   ....:

# plot the custom legend

In [56]: plt.legend(handles=patchList, loc='lower center', bbox_to_anchor=(0.5, -0.5), ncol=1)

Out[56]: <matplotlib.legend.Legend at 0x1501dfb3198>

# Add title

In [57]: plt.title("Amount of inhabitants natural breaks classifier")

Out[57]: Text(0.5, 1.0, 'Amount of inhabitants natural breaks classifier')

In [58]: plt.tight_layout()

Todo

Task:

Try to test different classification methods ‘Equal Interval’, ‘Quantiles’, and ‘Std_Mean’ and visualise them.
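As a starting point for this task, the ideas behind the ‘Equal Interval’ and ‘Quantiles’ schemes can be explored with plain pandas. Note that this sketch uses pd.cut() and pd.qcut() as stand-ins, not the pysal classifiers themselves, and the ten population values are picked for illustration:

```python
import pandas as pd

# Ten illustrative population values, sorted from small to large
population = pd.Series(
    [141, 900, 3369, 4514, 5435, 10793, 15989, 34394, 96506, 430805]
)
n_classes = 5

# Equal interval: split the value range into 5 equally wide bins
equal_interval = pd.cut(population, bins=n_classes, labels=False)

# Quantiles: choose the breaks so that each class holds the same number of values
quantiles = pd.qcut(population, q=n_classes, labels=False)

comparison = pd.DataFrame({
    "population": population,
    "equal_interval": equal_interval,
    "quantiles": quantiles,
})
print(comparison)
```

With one very large value in the data, equal intervals put almost everything into the lowest class, while quantiles spread the values evenly across classes. Comparing the two side by side shows how strongly the choice of scheme shapes the resulting map.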